Cloud serverless architectures have become increasingly popular in recent years, and many enterprises have started leveraging them and even migrating some of their workloads from traditional architectures to ephemeral, event-driven architectures.

In this article we are going to discuss:

- Major benefits brought by serverless architecture.

- Important rules and caveats when deciding to go serverless.

- Serverless tooling.

- Integration patterns between serverless components.

- Serverless scheduling.

- How to notify frontend apps of asynchronous processing progress.

So put your seat belts on and let's begin!

Serverless architecture advantages:

The transition from traditional architectures to a cloud serverless architecture comes with many advantages such as:

- Reduced cost – the on-demand pricing model of serverless means that you pay per function execution, which is much more efficient than paying a fixed price for servers even when they sit idle.

- Scalability – serverless architecture handles unpredictable load well and scales easily when needed. When a new feature suddenly becomes popular, the serverless provider scales up and down automatically, so there is no need to worry or prepare upfront.

- Fault tolerance – the objective of a fault-tolerant system is to prevent disruptions arising from a single point of failure, ensuring high availability and business continuity for mission-critical applications. A system based on serverless architecture can continue operating without interruption when one or more of its components fail.

Rules and caveats before going serverless

Single Responsibility

Deriving from the Single Responsibility Principle (SRP) of software quality, each serverless component must be non-trivial and have a single responsibility. For instance, a shopping store application can be split into multiple serverless components:

- A component that takes care of authentication/authorization and user account management.

- A component for managing products and categories.

- A component for processing payments and refunds.

- A component for managing users' shipping/billing addresses and tracking numbers.

- etc.

This separation of roles is mandatory and is the most important rule driving the transition, because it helps build a fault-tolerant architecture: when something goes wrong, we can easily identify the affected component and, instead of patching and redeploying the entire application, redeploy only that component.

Going serverless is not always budget friendly

If you think serverless will always save you money, think again. In some use cases it can be more expensive than a non-serverless approach, notably for applications with predictable, steady, heavy workloads.

Such applications are a poor fit for Lambda functions: because they are always busy, running them on Lambda will cost you more than deploying them as a service on ECS or Kubernetes.

A calculator tool can help you make that decision: provide it with the number of executions per month, the average request duration, and the memory allocated, and it will give you an estimate of the average monthly spend.

Do the same for ECS and go with the cheaper one.

As a rule of thumb, for periodic or very light workloads, Lambda is dramatically less expensive than ECS, and for steady high workloads, instances are preferable.
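To make the comparison concrete, here is a minimal sketch of the arithmetic such a calculator performs. The per-request and per-GB-second prices and the flat container price are illustrative assumptions, not quoted figures; check your provider's pricing page before relying on them.

```python
def lambda_monthly_cost(executions, avg_duration_ms, memory_mb,
                        price_per_million_requests=0.20,
                        price_per_gb_second=0.0000166667):
    """Estimate a monthly Lambda bill in USD (assumed prices, free tier ignored)."""
    # Compute time is billed in GB-seconds: duration (s) x memory (GB) per execution.
    gb_seconds = executions * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = executions / 1_000_000 * price_per_million_requests
    return request_cost + gb_seconds * price_per_gb_second


def cheaper_option(lambda_cost, container_cost):
    """Return which deployment target is cheaper for the given monthly costs."""
    return "lambda" if lambda_cost < container_cost else "container"


# Hypothetical flat monthly price of an always-on container service.
CONTAINER_MONTHLY_COST = 30.0

# A light workload: 1M executions, 100 ms each, 128 MB.
light = lambda_monthly_cost(1_000_000, 100, 128)
# A steady heavy workload: 500M executions, 200 ms each, 512 MB.
heavy = lambda_monthly_cost(500_000_000, 200, 512)

light_choice = cheaper_option(light, CONTAINER_MONTHLY_COST)
heavy_choice = cheaper_option(heavy, CONTAINER_MONTHLY_COST)
```

With these assumed numbers the light workload comes out cheaper on Lambda and the heavy one cheaper on the container service, which matches the rule of thumb above.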

Opt for event-driven messaging between system components

Opt for event-driven communication between system components as much as possible. Instead of sending a request from one component to another and synchronously waiting for the reply, it is preferable to send the event to an integration service like SNS, SQS, or EventBridge and let the other system components decide whether to process the event or ignore it.

A message is a packet of data. An event is a message that notifies other components about a change or an action that has taken place.

Avoid FaaS platform cold starts

When a Lambda function is invoked and no warm execution environment is available, a new one is provisioned in a process commonly called a Cold Start. This initialization phase increases the total execution time of the function. Consequently, at the same concurrency, cold starts can reduce the overall throughput.

Cold start duration depends on several factors:

- Function runtime - the language used for the Lambda function code. Java and .NET runtimes take more time to bootstrap than Node.js and Python runtimes, which is one reason Node.js is so widely used with Lambda.

- Function network configuration - whether the function needs to connect to VPC resources or not. A VPC-connected Lambda can take more time to bootstrap than a non-VPC Lambda.

- Deployment package size - the bigger the artifact, the higher the cold start latency. Avoid packaging unnecessary files such as docs, tests, builds, and images into the deployment package; store them in a storage service like S3 and download them as needed.

You can avoid cold starts by making sure your Lambda functions are always warm, so subsequent events can reuse the instance of a Lambda function and its host container from the previous event. This can be done with two approaches:

- CloudWatch scheduled event rule - a hacky approach that uses a CloudWatch scheduled event rule to invoke a Lambda function X times concurrently every 5 minutes, so that X Lambda instances are always warm and ready.

- Provisioned concurrency - the official AWS approach, which allows more precise control over start-up latency when Lambda functions are invoked. When enabled, it is designed to keep your functions initialized and hyper-ready to respond in double-digit milliseconds at the scale you need.

Keep your infrastructure version controlled

Unlike traditional architectures, a serverless architecture leaves you with a lot of infrastructure resources and configuration for each component. If you choose to manage those resources manually from the cloud provider's console, it will become a nightmare for you and your team.

It's advisable to use an Infrastructure as Code (IaC) tool to version control your infrastructure, plan and apply resource changes, and track changes made by your DevOps team, giving you clearer visibility into your current infrastructure state.

There are multiple IaC tools you can use; the best known are Terraform, Pulumi, Serverless, and AWS CloudFormation. Terraform is my favourite and the one we use at OBytes, and we highly recommend it. Sorry AWS, but we prefer Terraform.

Avoid DoSing yourself

Serverless providers like AWS enforce limits on services like Lambda, and sometimes a limit applies across the entire AWS account. For example, AWS enforces a default limit of 1,000 concurrent function executions across the entire AWS account in a given region.

Imagine a project that uses the same account for QA and Prod, and a developer who runs a stress test that invokes 1,000 concurrent functions on QA: they will accidentally DoS the production application.

So always keep an eye on limits and utilization, use different AWS accounts for QA and Prod, and either migrate applications with high workloads from a FaaS approach to a long-running containerized application or implement a queuing pattern to level the load.

Standardise message format

Use a standard event message format across your serverless components. Publishing events in a non-standard format can crash a component that expects the message in another format.

This is an example of a standard event message that contains the event type, event source and event details:

    {
      "eventSource": "payment-service",
      "eventType": "PAYMENT_SETTLED",
      "eventDetails": {
        "customer_id": 666,
        "payment_id": "123456789987654321",
        "amount": 12,
        "currency": "USD",
        "fee": 0.12,
        "settlement_time": 1631380398
      }
    }
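One way to enforce such a format is to build and validate every event through a shared helper. The sketch below is only an illustration: the three field names come from the example above, while the helper names `build_event` and `parse_event` are hypothetical.

```python
import json

# Envelope fields taken from the example message above.
REQUIRED_FIELDS = ("eventSource", "eventType", "eventDetails")


def build_event(source, event_type, details):
    """Wrap the details in the standard envelope and serialize it to JSON."""
    return json.dumps({
        "eventSource": source,
        "eventType": event_type,
        "eventDetails": details,
    })


def parse_event(raw):
    """Parse a raw message and reject anything that is not a standard envelope."""
    try:
        event = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(event, dict):
        raise ValueError("event must be a JSON object")
    missing = [field for field in REQUIRED_FIELDS if field not in event]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if not isinstance(event["eventDetails"], dict):
        raise ValueError("eventDetails must be an object")
    return event
```

A consumer that calls `parse_event` first can reject a malformed message with a clear error instead of crashing halfway through processing.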

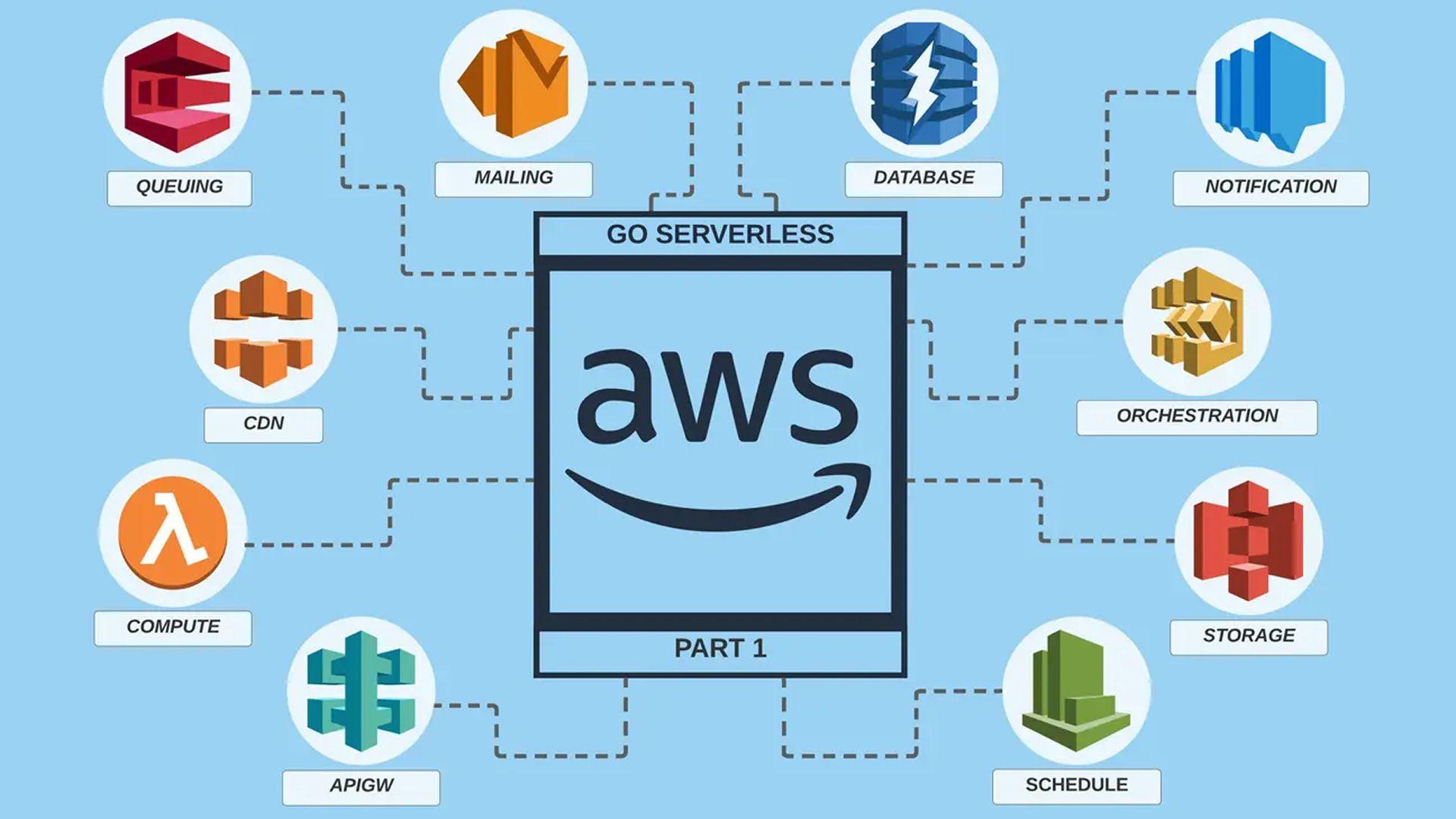

Serverless Tooling

There are plenty of services to use for building serverless applications. I usually build serverless applications on AWS, so all the services below are AWS products:

- Backend and Compute - AWS Lambda, AWS Fargate Tasks, AWS Glue.

- Frontend and CDN - AWS Simple Storage Service (S3), AWS CloudFront.

- Integration & Orchestration - AWS EventBridge, AWS Simple Notification Service (SNS), AWS Simple Queue Service (SQS), AWS IoT, AWS Step Functions.

- Database - AWS Aurora Serverless, DynamoDB.

- API Gateways - AWS HTTP API Gateway, AWS WebSockets Gateway.

- Continuous Integration - AWS CodeBuild, AWS CodePipeline, AWS CodeDeploy.

- Infrastructure as Code - Terraform, Pulumi, Serverless.

In case you prefer Azure or GCP, they offer good alternatives for almost all services above.

Integration patterns between serverless components

There are multiple integration patterns you can use to ensure resilient communication between serverless components. Most of them are based on the parent event-driven messaging pattern and do not follow the synchronous request-reply messaging pattern.

Asynchronous Request-Reply pattern

Decouple long-running backend processing from a frontend client, where backend processing needs to be asynchronous, but the frontend still needs a clear response.

Problem

Consider a platform that receives requests to process a resource, where the background processing takes minutes or even hours, but users want to follow its progress in real time and be notified when it is done.

So how can users track the progress of the processing? In a synchronous request-reply messaging pattern the user can send the resource to be processed and wait for the response, but in this case the processing can take hours, and no API Gateway or load balancer can keep a connection alive that long: most load balancers have an idle timeout of around 1 hour at most, and AWS API Gateway has a hard timeout limit of 30 seconds.

Solution

Having a synchronous request-reply pattern will not work, so we need to decouple backend processing from the frontend application, where backend can take its time processing the resource asynchronously through different serverless components.

However, the frontend still needs a clear response, so the backend sends an acknowledgment reply with a Valet Key, which is a unique key for the resource being processed.

The frontend application now has the resource's unique valet key, but how can it get the progress of the processing? This can be done using HTTP polling. Polling is useful to client-side code, as it can be hard to provide callback endpoints or use long-running connections.

Even when callbacks are possible, the extra libraries and services they require can sometimes add too much complexity. For example:

- Websockets - using AWS Websocket API Gateway.

- MQTT's topic system - using the AWS IoT broker.

We will discuss these alternatives more closely at the end of the article.

Example: Glue - Long-Running DB Refresh Workflow

This is an example of a long-running Glue workflow that the user triggers through the /start API Gateway route.

The workflow takes a long time to finish because it takes a snapshot of the production DB, drops the QA DB, restores the QA DB from the production snapshot, and finally sanitizes the DB.

The frontend cannot wait for all of that in a single synchronous request-reply, so the backend acknowledges the request and returns the Valet Key, which is the Glue workflow run ID, in the /start response. It also provides a /status endpoint that the frontend application can use for HTTP polling.

Glue keeps running and updates the workflow status as it progresses, and every 10 seconds (the polling interval) the frontend application polls the workflow status from Glue through API Gateway and a Lambda function.
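The client side of this flow boils down to a polling loop. Here is a minimal sketch of it, assuming a `get_status` callable that wraps the /status request for a given valet key. The status names, the attempt cap, and the function name are hypothetical and not taken from the Glue API.

```python
import time

# Hypothetical terminal states reported by the /status endpoint.
TERMINAL_STATUSES = {"COMPLETED", "FAILED"}


def poll_until_done(get_status, interval_seconds=10, max_attempts=360,
                    sleep=time.sleep):
    """Call get_status() every interval until it reports a terminal state.

    Returns (status, attempts). Raises TimeoutError when max_attempts is hit,
    so a stuck workflow does not keep the client polling forever.
    """
    for attempt in range(1, max_attempts + 1):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status, attempt
        sleep(interval_seconds)  # wait one polling interval before retrying
    raise TimeoutError(f"no terminal status after {max_attempts} attempts")
```

The `sleep` parameter is injectable only so the loop can be exercised without actually waiting; in a real client it stays at its default.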

Queue-Based Load Leveling pattern

Use a queue that acts as a buffer between a job and the component that processes it, in order to smooth out heavy loads that could otherwise cause the processing component to crash or the job to time out.

Problem

Imagine a use case where two services are coupled together with a synchronous request-reply messaging pattern. Service A generates and sends tasks to Service B at a variable rate, so it's difficult to predict the volume of requests to Service B at any time. Service B, on the other hand, has historically processed tasks at a known, consistent rate.

What happens if there is a burst of task generation in Service A, and the task rate exceeds the consistent rate that Service B can handle? Service B will experience peaks in demand that overwhelm it and leave it unable to respond to Service A's requests in a timely manner.

Solution

By introducing a queue between Service A and Service B, messaging between the two services becomes asynchronous: Service A becomes a task producer and Service B becomes a task consumer.

The queue acts as a task buffer between the two components and levels the load on Service B, which polls only as many tasks as it can handle without crashing. The number of tasks polled at once is known in queuing systems as the prefetch count.
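The effect of the buffer is easy to see in a small simulation. This is only a sketch with an in-memory queue standing in for the real one; the tick-based model and the function name `level_load` are assumptions made for illustration.

```python
from collections import deque


def level_load(bursts, prefetch_count):
    """Simulate a producer/consumer pair separated by a queue.

    bursts[i] is the number of tasks the producer enqueues at tick i.
    At every tick the consumer takes at most prefetch_count tasks.
    Returns the number of tasks the consumer processed at each tick.
    """
    queue = deque()
    processed_per_tick = []
    next_task_id = 0
    tick = 0
    # Keep ticking until the producer is done and the backlog is drained.
    while tick < len(bursts) or queue:
        if tick < len(bursts):
            for _ in range(bursts[tick]):
                queue.append(next_task_id)
                next_task_id += 1
        batch_size = min(prefetch_count, len(queue))
        batch = [queue.popleft() for _ in range(batch_size)]
        processed_per_tick.append(len(batch))
        tick += 1
    return processed_per_tick


# A burst of 10 tasks is spread over several ticks of at most 4 tasks each.
leveled = level_load([10, 0, 0], prefetch_count=4)
```

However large the burst, the consumer never sees more than `prefetch_count` tasks at once; the burst only makes the backlog take longer to drain.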

Example: SQS - Time Limited Voting System

This is an example of a global voting system restricted to a one-hour time window.

- The voting must open at 6pm and close at 7pm.

- There are millions of users that will participate in the voting.

The Lambda function responsible for persisting votes to the database has a concurrent execution hard limit of 1,000; however, given the high number of users and the time limit, there can be more than 1,000 concurrent users during that window.

To prevent our system from being overwhelmed by too many voting requests, we are going to place an SQS queue in front of the voting Lambda and configure the voting Lambda to consume from that queue.

The number of messages that an Amazon SQS queue can store is unlimited, and standard queues support a nearly unlimited number of API calls per second, per API action (SendMessage, ReceiveMessage, or DeleteMessage), so SQS fits this use case.

Users can't call SendMessage on the SQS queue directly without an API, so we have to integrate SQS with API Gateway. But what throttling limits does API Gateway enforce?

The default limit on requests made to API Gateway is 10,000 requests per second (RPS), but fortunately it's a soft limit that can be raised by contacting AWS support.

There will be no duplicate votes because a user's old vote is replaced by the same user's new vote if they decide to vote twice. This is just a proof of concept; a good voting system is normally built using blockchain technology.
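The "old vote is replaced" behaviour amounts to keying each stored vote by user. A minimal sketch, assuming each vote arrives as a (user_id, choice) pair and using a plain dict in place of the database table:

```python
def tally(votes):
    """Count votes, keeping only the last processed vote of each user."""
    latest_choice = {}
    for user_id, choice in votes:
        # Writing under the same key overwrites the user's previous vote.
        latest_choice[user_id] = choice
    counts = {}
    for choice in latest_choice.values():
        counts[choice] = counts.get(choice, 0) + 1
    return counts


result = tally([("u1", "A"), ("u2", "B"), ("u1", "B")])
```

Note that "last" here means last processed: standard SQS queues do not guarantee ordering, so a user who votes twice in quick succession may end up with either vote recorded.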

Publisher-Subscriber pattern

This is the most basic pattern. It enables a publisher application to announce events to multiple interested consumers asynchronously, without coupling the sender to the receivers.

Problem

In a serverless distributed system, components need to notify other components as events happen.

Asynchronous messaging using the queue pattern is an effective way to decouple senders from consumers and avoid blocking the sender while it waits for a response. However, it has a set of limitations:

- If there is a significant number of consumers, using a dedicated message queue for each consumer will not scale effectively.

- Some of those consumers might be interested in only a subset of the events.

- There is no way for a sender to announce events to all interested consumers without knowing their identities.

Solution

The Publisher-Subscriber pattern can fix these limitations, and it can be implemented using a messaging broker or an event bus.

In the case of a messaging broker:

- The topic is created at the broker level.

- Subscribers connect to the broker and select the topics to subscribe to.

- The publisher connects to the broker and publishes the event to the target topic.

- The broker notifies all subscribers about the received event.

In the case of an event bus:

- An event bus is created to receive the events.

- A rule is created to filter events and send them to targets.

- The publisher sends the event to the target event bus.

- If the rule matches, the event bus triggers the target consumers with the event as payload.
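The event bus flow can be sketched in a few lines with an in-memory bus. This is a toy model, not the EventBridge API: the `EventBus` class is hypothetical, and its rules match on simple field equality, which is much simpler than real event patterns.

```python
class EventBus:
    """Toy event bus: rules pair a filter pattern with a target callable."""

    def __init__(self):
        self._rules = []  # list of (pattern, target) pairs

    def add_rule(self, pattern, target):
        """Register a target for events whose fields equal those in pattern."""
        self._rules.append((pattern, target))

    def publish(self, event):
        """Deliver the event to every matching target; return the delivery count."""
        delivered = 0
        for pattern, target in self._rules:
            # An empty pattern matches every event.
            if all(event.get(key) == value for key, value in pattern.items()):
                target(event)
                delivered += 1
        return delivered


welcome_mails, audit_log = [], []
bus = EventBus()
bus.add_rule({"eventType": "USER_REGISTERED"}, welcome_mails.append)
bus.add_rule({}, audit_log.append)

bus.publish({"eventType": "USER_REGISTERED", "eventDetails": {"user_id": 1}})
bus.publish({"eventType": "PAYMENT_SETTLED", "eventDetails": {"payment_id": "p-1"}})
```

The publisher only knows the bus. The mailing consumer sees just the registration event, the audit consumer sees both, and neither has to be known to the sender.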

The services you can use to implement this pattern are:

- AWS EventBridge

- AWS Simple Notification Service (SNS)

- AWS Internet of Things (IoT)

Example: SNS - Users Registration Process

The Publisher-Subscriber pattern can be illustrated with a registration system, where the registration component publishes each registered user to an SNS topic and other components subscribe to the registration topic.

For instance, the analytics component can subscribe for calculating demographics, the mailing component can subscribe for sending a welcome email to the new user, and the on-boarding component can subscribe for further registration steps.

Sequential Convoy pattern

Process a set of related events in order, without blocking the processing of other groups of events.

Problem

Some applications need to process events in the order they arrive, but strict ordering across all events prevents them from scaling to handle a large volume of events.

Solution

Use two FIFO queues. The first, ungrouped queue receives the events and sends them to a processor that tags related events with the same group ID and forwards them to the second, grouped FIFO queue.

Finally, the consumers can process each group in sequence while processing many groups in parallel.

Example: SQS - Ledger

In a ledger system, operations must be performed in FIFO order, but only at the order level. However, the ledger receives transactions for multiple orders.

One requirement of a ledger system is that it should be fast. If we use a single FIFO queue to receive the transactions of all orders and a single consumer to process them in order, the transactions of different orders will block each other, and the ledger will be slow to process multiple orders at the same time. On top of that, this approach allows only one consumer, or else the transactions will not be processed in a FIFO manner, so we cannot have competing consumers to process transactions faster.

Following the sequential convoy pattern we can have these components:

- Ledger queue - a non-grouped FIFO queue that accepts the transactions of all orders.

- Ledger processor - processes the ledger's incoming transactions in order. It's fast because it only saves the transaction to the ledger, tags it with its order ID, and sends it to the second FIFO queue.

- Transactions queue - unlike the first queue, this is a grouped FIFO queue, which means that FIFO ordering is applied at the group level.

- Orders consumers - competing consumers that process the transactions queue in parallel, while the transactions of each order are still processed in FIFO order.
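The grouping step can be illustrated with an in-memory sketch. Here the order ID plays the role of the message group ID; the function names and the round-robin consumer are assumptions standing in for real competing consumers.

```python
from collections import deque


def group_by_order(transactions):
    """Tag step: put each transaction into the FIFO queue of its order."""
    groups = {}
    for transaction in transactions:
        groups.setdefault(transaction["order_id"], deque()).append(transaction)
    return groups


def process_interleaved(groups):
    """Consume one transaction per order in turn.

    Orders no longer block each other, yet inside an order the
    transactions still come out in arrival order.
    """
    processed = []
    while any(groups.values()):
        for queue in groups.values():
            if queue:
                processed.append(queue.popleft())
    return processed


incoming = [
    {"order_id": "A", "seq": 1},
    {"order_id": "A", "seq": 2},
    {"order_id": "B", "seq": 1},
    {"order_id": "A", "seq": 3},
    {"order_id": "B", "seq": 2},
]
processed = process_interleaved(group_by_order(incoming))
```

Order B's first transaction is handled right after order A's first one instead of waiting behind all of A's backlog, which is the whole point of the second, grouped queue.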

Claim-Check pattern

Protect the event bus from being overwhelmed by large messages by splitting each message into a Claim Check and an actual Payload: the claim check is sent to the event bus and the payload is stored in a database.

Problem

Messaging services such as event buses are fine-tuned to handle large quantities of small messages, but they perform poorly with large messages, and some messaging services even enforce limits on message size so as not to hurt the overall performance of the service.

Solution

Split the message into a claim check and an actual payload, send the claim check to the event bus, and store the payload in a database. Services interested in the large message can subscribe to the event bus and use the claim check to retrieve the actual payload from the database.

Example: Avatars Resizing

The use case here is optimizing users' avatars. Users' pictures are usually taken by cameras in high quality, but we need to turn them into web-ready pictures.

Users upload their avatar through API Gateway. We could simply integrate API Gateway with the Lambda function responsible for resizing images, but that will not work. Why?

The maximum payload size for a Lambda function invocation is 6 MB, so a user cannot upload an avatar that exceeds that size, and not all users know how to resize an image.

So instead of sending the binary image from API Gateway directly to Lambda, we can integrate API Gateway with S3 using the PutObject operation and set up an S3 notification to notify the Lambda function about the new object.

After a successful upload, the S3 notification sends only the S3 key of the original image, which acts as the claim check, to the Lambda function. The Lambda function can then download the avatar, do its magic, and upload the resized avatar.

In our case, the Lambda function uses the same bucket that triggers it, which could cause the function to run in a loop: if the bucket triggers the function each time an avatar is uploaded, and the function uploads the resized avatar to the same bucket, then the function indirectly triggers itself. To avoid this, we should configure the trigger to apply only to the prefix /uploads used for incoming avatars.
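Here is a minimal in-memory sketch of the same flow. A dict-backed store stands in for the S3 bucket and a list for the notification channel; the class and function names, the message fields, and the `uploads/` key prefix (written without a leading slash) are all assumptions.

```python
import uuid

UPLOAD_PREFIX = "uploads/"  # assumed prefix for incoming avatars


class PayloadStore:
    """Stand-in for the bucket that holds the large payloads."""

    def __init__(self):
        self._objects = {}

    def put(self, data):
        key = f"{UPLOAD_PREFIX}{uuid.uuid4()}"
        self._objects[key] = data
        return key

    def get(self, key):
        return self._objects[key]


def publish_large_message(store, bus, payload):
    """Store the payload and put only its claim check on the bus."""
    claim_check = store.put(payload)
    bus.append({"claimCheck": claim_check, "size": len(payload)})
    return claim_check


def should_process(key):
    """Only react to incoming uploads, so writing results cannot retrigger us."""
    return key.startswith(UPLOAD_PREFIX)


def fetch_payload(store, message):
    """Redeem the claim check for the actual payload."""
    return store.get(message["claimCheck"])
```

The message on the bus stays a few dozen bytes long no matter how large the avatar is, and the prefix check mirrors the trigger filter described above.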

Pipes and Filters pattern

Split a component that performs a complex processing into multiple components that can be reused.

Problem

Imagine we have two monolith components, A and B, that do almost the same thing, except that component B does two extra jobs at the start and at the end of the processing.

The components share some processing steps and the same code for those steps. What happens if we need to change the code for the shared steps?

Correct, we will have to refactor the code in both components. And this is just the case of steps shared between two components; what if the steps are shared between tens of components? Any refactoring of one component will have to be replicated across all the others, which will discourage developers from optimizing the code, knowing that they will face a nightmare refactoring all those components.

Solution

As discussed earlier in the article, a basic solution is to follow the Single Responsibility Principle by decomposing the monolith components with nearly identical processing steps into multiple small components, aka Filters, and building a workflow that reconstructs the original processing steps of the monolith components using branches, aka Pipes.

This improves reusability and scalability by allowing the elements that perform the processing to be deployed and scaled independently.

Services that can be used for this pattern are:

- AWS Glue

- AWS Step Functions

- AWS CodePipeline

Example: DB Refresh Pipelines

In this use case, we have two components that need to refresh QA DB from Production DB:

- Component A: refreshes the QA payments DB and sanitizes the refreshed DB.

- Component B: refreshes the QA core DB and sanitizes the refreshed DB, then performs two more steps: seeding fixtures data and dumping the sanitized DB for local development.

The two components share steps such as the restoration step and the sanitization step. To make those steps reusable across the current components and any future component that needs the same implementation, we have decomposed the components' steps into 4 Glue Jobs (Filters): Restorer Job, Sanitizer Job, Fixtures Job, and Dump Job.

Finally, we have reconstructed the original monolith components using Step Functions (Pipes).
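The composition idea can be shown with plain functions. In this sketch each filter is a hypothetical stand-in for one job that just records the step it performed, and `pipeline` plays the role of the workflow that chains them.

```python
from functools import reduce


# Filters: each one takes the list of completed steps and appends its own.
def restore(steps):
    return steps + ["restored"]


def sanitize(steps):
    return steps + ["sanitized"]


def seed_fixtures(steps):
    return steps + ["fixtures seeded"]


def dump(steps):
    return steps + ["dumped"]


def pipeline(*filters):
    """Pipe: chain filters so the output of one feeds the next."""
    def run(initial):
        return reduce(lambda state, step: step(state), filters, initial)
    return run


# Both workflows reuse the same restore and sanitize filters.
refresh_payments_db = pipeline(restore, sanitize)
refresh_core_db = pipeline(restore, sanitize, seed_fixtures, dump)

payments_steps = refresh_payments_db([])
core_steps = refresh_core_db([])
```

A change to `sanitize` now lands in both workflows at once, which is exactly what the monolith version made painful.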

Serverless Scheduling

Instead of spinning up a long-running instance like celery beat just to schedule jobs, you can use serverless scheduling solutions like EventBridge and DynamoDB TTL, which will ensure that the job runs at (or shortly after) the specified time.

EventBridge Scheduling

EventBridge rules can be used to trigger a target component on a schedule. The schedule is controlled by a cron expression.

Example: Monthly Invoicing App

In this example we have an EventBridge rule that will trigger the invoicing lambda every month.

DynamoDB TTL scheduling

DynamoDB has two features that can be combined to implement scheduling:

- Item TTL attribute - Amazon DynamoDB Time to Live (TTL) allows you to define a per-item timestamp to determine when an item is no longer needed. Shortly after the date and time of the specified timestamp, DynamoDB deletes the item from your table.

- DynamoDB Streams - captures a time-ordered sequence of item-level modifications in a DynamoDB table. Those modification events can be set to trigger a service like Lambda.

So we can have an item with an expiration date. Shortly after that date, DynamoDB deletes the item and writes a delete (REMOVE) record to the stream, which invokes a Lambda function.

Example: Accounts Banning System

We can use this pattern to implement a banning system in which users can report other users' posts. The reports are analysed by a classification Lambda, and if the claim is true, the classification Lambda sends a deactivation event with the deactivation reason and the user account ID to an SNS topic.

When the account management Lambda receives the event, it deactivates the account and adds it to the BannedAccounts DynamoDB table with a TTL set to when the account should be reactivated.

Shortly after the TTL expires, DynamoDB deletes the item and writes a record for the delete operation to the stream; the Lambda then receives that record and reactivates the account.
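Both halves of this flow can be sketched without touching AWS. The first helper builds the item to store, with the TTL as an epoch timestamp in seconds; the second extracts the accounts to reactivate from a stream event shaped like the ones Lambda receives. The `account_id` key, the `ttl` attribute name, and the function names are assumptions.

```python
import time

# TTL deletions are performed by the DynamoDB service itself, which shows up
# as this principal in the stream record's userIdentity field.
TTL_PRINCIPAL = "dynamodb.amazonaws.com"


def build_ban_item(account_id, ban_seconds, now=None):
    """Item for the banned accounts table; 'ttl' is the reactivation time."""
    now = int(time.time()) if now is None else int(now)
    return {"account_id": account_id, "ttl": now + ban_seconds}


def accounts_to_reactivate(stream_event):
    """Return account IDs whose ban item was removed by TTL expiry."""
    account_ids = []
    for record in stream_event.get("Records", []):
        if record.get("eventName") != "REMOVE":
            continue  # inserts and updates are not expirations
        identity = record.get("userIdentity", {})
        if identity.get("principalId") != TTL_PRINCIPAL:
            continue  # item was deleted manually, not by TTL
        account_ids.append(record["dynamodb"]["Keys"]["account_id"]["S"])
    return account_ids
```

Filtering on the service principal keeps a manual cleanup of the table from accidentally reactivating banned accounts.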

How to notify frontend apps of asynchronous processing progress?

As discussed in the Asynchronous Request-Reply pattern section, we can use HTTP polling to periodically check for progress, but this approach comes with several drawbacks:

- Polling is much more resource intensive: if thousands of users are polling our API Gateway, you will be surprised by the AWS bill at the end of the month.

- Multiple HTTP requests from the same client can be in flight simultaneously, for example when a client has two browser tabs open consuming the same server resource.

- There can be a delay of up to the polling interval, so the processing may be done while the status is still not updated on the frontend side.

There are two alternatives to the HTTP polling approach: Websockets and Telemetry with MQTT.

Websockets

Open a websocket connection with API Gateway, subscribe to a long-running job, and let the job notify the subscribed clients through their connection IDs whenever there is progress.

This approach has multiple advantages:

- WebSockets keep a single connection open, eliminating the latency problems that arise with polling, so progress is reported as soon as it is made.

- WebSockets generally do not use XMLHttpRequest, so headers are not sent every time we need more information from the server. This, in turn, reduces the amount of data sent to the server.

Example: AWS WSS Gateway - Stock explorer application

A stock explorer application is a perfect example for the websockets approach because traders want to know stock prices in real time or else they will lose money.

- First, the traders connect to the AWS WSS Gateway. For each connection request, the WSS gateway adds the trader's Connection ID to a DynamoDB table.

- The trader wants to receive the real-time price of the S&P500 stock, so they send a subscription request to the WSS Gateway, and the Lambda function adds the user/stock subscription to the subscriptions DynamoDB table.

- On each S&P500 price change, the stocks service gets the list of traders subscribed to that stock, retrieves the connection IDs of each subscribed trader, and finally notifies them through the WSS gateway.
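The bookkeeping behind these three steps can be sketched with two in-memory maps in place of the two DynamoDB tables. The class and method names are hypothetical.

```python
class SubscriptionRegistry:
    """Tracks live connections and per-stock subscriptions."""

    def __init__(self):
        self._connections = {}    # trader_id -> connection_id
        self._subscriptions = {}  # symbol -> set of trader_ids

    def connect(self, trader_id, connection_id):
        self._connections[trader_id] = connection_id

    def disconnect(self, trader_id):
        self._connections.pop(trader_id, None)

    def subscribe(self, trader_id, symbol):
        self._subscriptions.setdefault(symbol, set()).add(trader_id)

    def connections_for(self, symbol):
        """Connection IDs to notify when the price of symbol changes."""
        traders = self._subscriptions.get(symbol, set())
        # Skip subscribers that are not currently connected.
        return sorted(self._connections[t] for t in traders
                      if t in self._connections)


registry = SubscriptionRegistry()
registry.connect("alice", "conn-1")
registry.connect("bob", "conn-2")
registry.subscribe("alice", "S&P500")
registry.subscribe("bob", "S&P500")
registry.disconnect("bob")
to_notify = registry.connections_for("S&P500")
```

The stocks service would then loop over the returned connection IDs and push the new price to each one through the gateway.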

MQTT: Topics and Telemetry

The major downsides of using the websocket approach are:

- User connection tracking overhead - as of now there is no way to track user connections using only API Gateway, so we had to add a DynamoDB table to track each connection.

- User notification is difficult - there is no way to send a message to multiple connections at once, so we have to fetch all user connections and loop through them. We can use async I/O requests to process notifications rapidly, but that will not scale if there are millions of connections.

Instead of using the low-level websocket approach, we can use a higher-level protocol, MQTT. With MQTT, instead of notifying individual connections, we use a topic system: users subscribe to one or more topics, and when we publish an event to a topic, the MQTT broker takes care of notifying all subscribed users.

The advantages of using a topic system with MQTT are:

- Ability to distribute events more efficiently.

- Unlike the websocket approach, it can dramatically reduce network bandwidth consumption thanks to the Telemetry feature.

- Reduces notification delivery times to seconds.

- Increased scalability: MQTT can scale to millions of users.

- Saved development time.

Example: AWS IoT - Soccer Fixtures Key Events Notifier

The last example is dedicated to soccer fans: a soccer fixtures key-events notifier is a good example of where we can implement an MQTT topic system.

The first thing to think about when building a real-time feature with MQTT is the topic architecture and how we are going to structure our topics. For this example, we will treat a topic as a fixture between two teams on a certain date, so the topic name format will be TeamA-TeamB-FixtureDate.

Our API Gateway receives soccer fixture key events from a third-party service as webhooks. The frontend application does not usually have endpoints to register for webhooks in the third-party service, which is why we are building a solution that lets soccer fans receive fixture key events.

Fans subscribe to the MQTT topics of their favourite teams' fixtures, or they can choose their favourite teams and let the system automatically subscribe them to all related fixtures.

For each key event received from the third-party soccer service, our Lambda is triggered and publishes the event to the related fixture's topic, and eventually the AWS IoT broker sends the key event to all subscribed fans.
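The topic naming and the automatic subscription step can be sketched as follows. The ISO date format for the FixtureDate part, the function names, and the shape of the fixtures list are assumptions.

```python
from datetime import date


def fixture_topic(team_a, team_b, fixture_date):
    """Build a topic name in the TeamA-TeamB-FixtureDate format."""
    return f"{team_a}-{team_b}-{fixture_date.isoformat()}"


def topics_for_fan(favourite_teams, fixtures):
    """Topics a fan should be subscribed to, given their favourite teams.

    fixtures is a list of (team_a, team_b, fixture_date) tuples.
    """
    return [
        fixture_topic(team_a, team_b, fixture_date)
        for team_a, team_b, fixture_date in fixtures
        if team_a in favourite_teams or team_b in favourite_teams
    ]


fixtures = [
    ("TeamA", "TeamB", date(2021, 9, 11)),
    ("TeamC", "TeamD", date(2021, 9, 12)),
    ("TeamB", "TeamC", date(2021, 9, 18)),
]
fan_topics = topics_for_fan({"TeamB"}, fixtures)
```

The publishing Lambda would call the same `fixture_topic` helper for each incoming key event, so publishers and subscribers always agree on the topic name.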

What's next

In the next part, we will discuss how to decouple the CI of Lambda code and dependencies from Terraform and delegate it to CodeBuild/CodePipeline.

Share the article if you find it useful! See you next time.