In the previous article, we promised to discuss how the web application for the WebSocket stack was deployed. Cheers, because that is the topic of today's article.

TL;DR

We're going to build a set of reusable Terraform modules to help you quickly deploy, preview and serve public static web applications and public/private media. The modules provide the same capabilities as Netlify, plus support for private pre-signed URLs, using only AWS services.

The modules we are going to build are:

- Terraform AWS CI: Reusable Terraform module for continuous integration of static web applications deployed on S3 and served by CloudFront.
- Terraform AWS Certify: Reusable Terraform module for provisioning and auto-validating TLS certificates for one or multiple domains using AWS Certificate Manager.
- Terraform AWS CDN: Reusable Terraform module for deploying, previewing and serving public static web applications and public/private media.

The Terraform AWS CDN is the parent Terraform module, and it uses Terraform AWS CI and Terraform AWS Certify as helper modules.

The components we are going to provision are:

- ACM Certificate: a managed ACM SSL/TLS certificate (to be attached to all CloudFront distributions).
- Policies: a cache optimized policy, an origin request policy and a response headers policy to improve performance and secure content (to be attached to all CloudFront distributions).
- URL Rewrite Function: a viewer request CloudFront function for URL rewriting and for routing the wildcard preview domain requests to the target preview content (to be attached to all CloudFront distributions).
- CloudFront Signer: the CloudFront public key and key group used to verify pre-signed URLs (to be attached to the private media CloudFront distribution).
- Main Application CDN: a public S3 bucket behind a CloudFront distribution that serves the public static web application.
- Unlimited Previews CDN: a public S3 bucket behind a CloudFront distribution to preview GitHub feature branches that have an open PR (like Netlify).
- Private Media CDN: a private S3 bucket behind a CloudFront distribution to serve private media using CloudFront pre-signed URLs (e.g. invoices, bank statements...).
- Public Media CDN: a public S3 bucket behind a CloudFront distribution to serve public media (e.g. avatars...).
- Release CI: a CI/CD pipeline to deploy the static web application to the public S3 bucket.
- Preview CI: a CI/CD build to deploy the static web application's feature branches to the preview S3 bucket.
- DNS Records: a DNS record for each CloudFront distribution.

Features

The reusable terraform modules will provide these capabilities:

✅ Improve application performance by delivering content faster to viewers using smaller file sizes, thanks to Brotli and Gzip compression.

✅ Reduce the number of requests that the origin S3 service must respond to directly. Thanks to CloudFront caching and the cache optimized policy, objects are served from CloudFront edge locations, which are closer to your users.

✅ Provide a response headers policy to secure your application's communications and customize its behavior, with CORS to control access to origin objects and Security Headers to exchange security-related information with browsers.

✅ Enforce URL rewriting rules that add the trailing slash, add the missing index.html filename and preserve the query string during redirects, to make sure the CDN works for single page applications and statically generated websites.

✅ Route preview requests to the target S3 preview content based on the Host header, by leveraging the wildcard domain and the viewer-request CloudFront function.

✅ Ability to conditionally provision the main, preview and media CDN components using the enable Terraform map variable.

✅ Protect and serve private media using Cloudfront Signed URLs.

✅ Improve search rankings for the web application and help meet regulatory compliance requirements for encrypting data in transit, by acquiring and auto-validating an SSL/TLS certificate for the main/preview/media CDN domains.

✅ Auto provision the DNS records for the main, preview, and the public/private media CDNs.

✅ Support Route53 and Cloudflare as DNS providers (if you want to use another DNS provider, you can call the module components directly).

✅ Support for monorepos, by providing the ability to change the project base directory using the app_base_dir Terraform variable.

✅ Support any package manager: you only need to specify the command used to fetch dependencies with the app_install_cmd Terraform variable (e.g. yarn install, npm install...).

✅ Ability to change the build command using the app_build_cmd Terraform variable (e.g. yarn build, gatsby build...).

✅ Support any static web application generator (React, Gatsby, VueJS): you only need to provide the build folder to deploy using app_build_dir (e.g. build for React and public for Gatsby).

✅ Ability to change the runtime node version using the app_node_version Terraform variable.

✅ Trigger the release pipeline based on pushes to GitHub branches or on tag publish events, by setting the pre_release Terraform variable to false or true.

✅ Support custom DOT ENV variables by leveraging AWS Secrets Manager. The CI module outputs envs_sm_id, which you can use to update the Secrets Manager secret version.

✅ On each started, succeeded and failed event, the CI pipeline can send notifications to the target Slack channels provided by the ci_notifications_slack_channels Terraform variable.

✅ To improve build performance and speed up subsequent runs, the pipeline can cache the node modules to a remote S3 artifacts bucket (s3_artifacts) at the end of each build and pull the cached node modules at the start of the next one.

✅ After a successful deployment, the CI pipeline uses the cloudfront_distribution_id to invalidate the old web application files in the edge cache. The next time a viewer requests the web application, CloudFront goes back to the origin to fetch the latest version of the application.

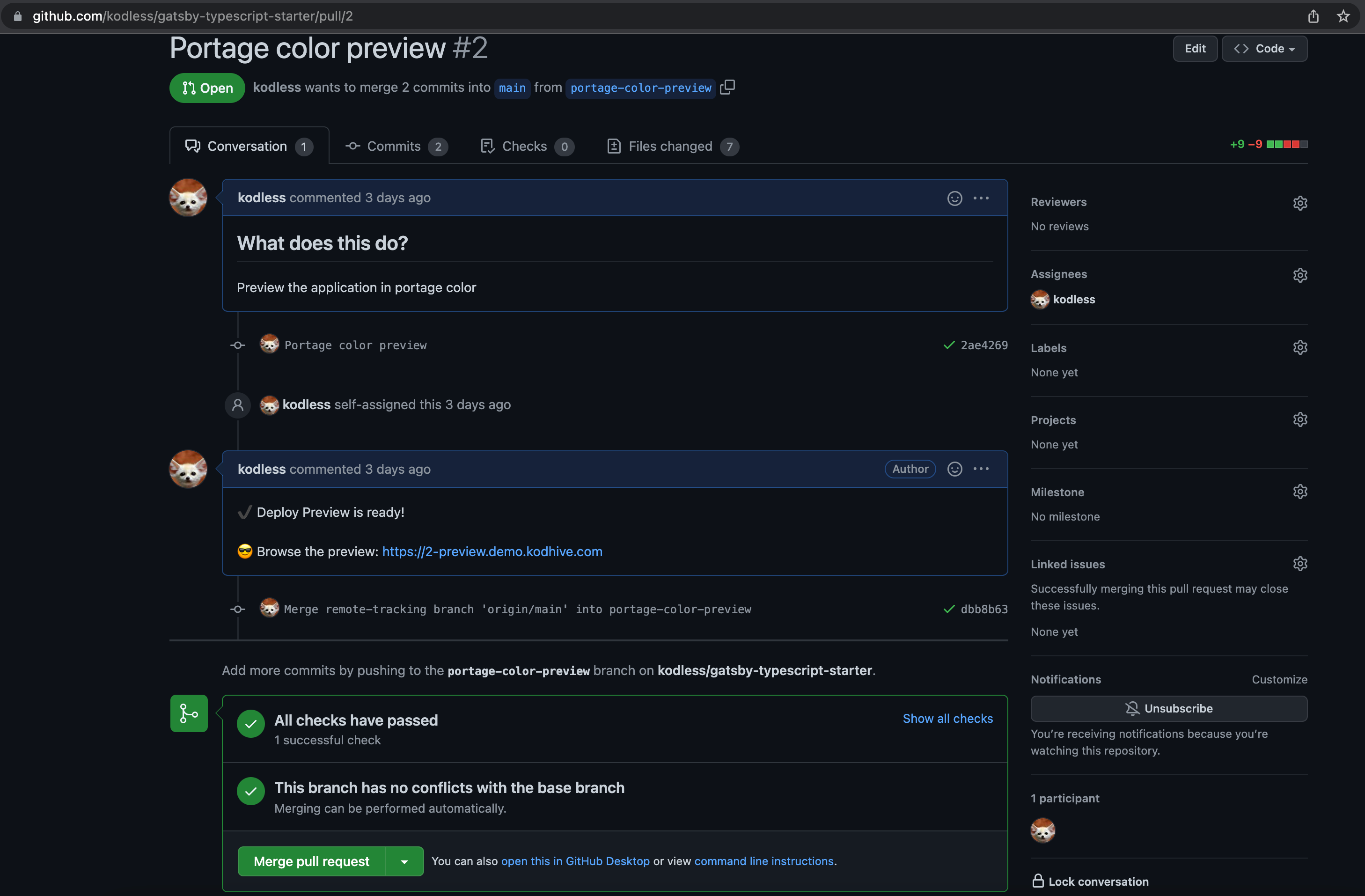

✅ Notify GitHub users of the preview build completion and the preview URL by commenting on the Pull Request that triggered the build.

Usage

For this example, we will provision the main and preview CDNs for a Gatsby starter application, plus the public/private media CDNs, using the main Terraform module terraform-aws-s3-cdn:

```hcl
module "demo_webapp" {
  source      = "git::https://github.com/obytes/terraform-aws-s3-cdn.git//modules/route53"
  prefix      = "${local.prefix}-demo"
  common_tags = local.common_tags
  comment     = "Demo web application"

  enable = {
    main          = true
    preview       = true
    public_media  = true
    private_media = true
  }

  dns_zone_id             = aws_route53_zone._.zone_id
  main_fqdn               = "demo.kodhive.com"
  public_media_fqdn       = "demo-public-media.kodhive.com"
  private_media_fqdn      = "demo-private-media.kodhive.com"
  media_signer_public_key = file("${path.module}/public_key.pem")
  content_security_policy = "default-src * 'unsafe-inline'"

  # Artifacts
  s3_artifacts = {
    arn    = aws_s3_bucket.artifacts.arn
    bucket = aws_s3_bucket.artifacts.bucket
  }

  # Github
  github = {
    owner          = "obytes"
    token          = "Token used to comment on github PRs when the preview is ready!"
    webhook_secret = "not-secret"
    connection_arn = "arn:aws:codestar-connections:us-east-1:{ACCOUNT_ID}:connection/{CONNECTION_ID}"
  }

  pre_release = false
  github_repository = {
    name   = "gatsby-typescript-starter"
    branch = "main"
  }

  # Build
  app_base_dir     = "."
  app_build_dir    = "public"
  app_node_version = "latest"
  app_install_cmd  = "yarn install"
  app_build_cmd    = "yarn build"

  # Notification
  ci_notifications_slack_channels = {
    info  = "ci-info"
    alert = "ci-alert"
  }
}
```

Next, initialize the Secrets Manager secrets that the CI process will use to populate the .env files:

```hcl
resource "aws_secretsmanager_secret_version" "main_webapp_env_vars" {
  secret_id = module.demo_webapp.main_webapp_envs_sm_id[0]
  secret_string = jsonencode({
    GATSBY_FIREBASE_API_KEY     = "GATSBY_FIREBASE_API_KEY"
    GATSBY_FIREBASE_PROJECT_ID  = "GATSBY_FIREBASE_PROJECT_ID"
    GATSBY_FIREBASE_AUTH_DOMAIN = "GATSBY_FIREBASE_AUTH_DOMAIN"
  })
}

resource "aws_secretsmanager_secret_version" "preview_webapp_env_vars" {
  secret_id = module.demo_webapp.preview_webapp_envs_sm_id[0]
  secret_string = jsonencode({
    GATSBY_FIREBASE_API_KEY     = "GATSBY_FIREBASE_API_KEY"
    GATSBY_FIREBASE_PROJECT_ID  = "GATSBY_FIREBASE_PROJECT_ID"
    GATSBY_FIREBASE_AUTH_DOMAIN = "GATSBY_FIREBASE_AUTH_DOMAIN"
  })
}
```
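During the build, the CI module turns this secret into a .env file. The article does not show how it does that, so here is a hypothetical JavaScript (Node.js) sketch of such a conversion. It assumes the secret is a flat JSON object of string values like the ones above. `toDotEnv` is an illustrative name, not part of the modules, and the quoting choices are assumptions, since .env parsers differ in how they treat escapes.

```javascript
// Hypothetical helper: turns a Secrets Manager SecretString (a flat JSON object,
// like the one produced by jsonencode above) into the text of a .env file.
function toDotEnv(secretString) {
  const vars = JSON.parse(secretString);
  const lines = Object.keys(vars)
    .sort() // deterministic order keeps CI logs readable
    .map((key) => {
      const value = String(vars[key]);
      // Assumption: no double quotes in values, because .env parsers disagree
      // on whether (and how) to unescape them.
      if (value.includes('"')) {
        throw new Error(`value of ${key} contains a double quote`);
      }
      // Inside double quotes, common .env parsers turn "\n" back into a newline.
      return `${key}="${value.replace(/\n/g, "\\n")}"`;
    });
  return lines.join("\n") + "\n";
}

const secret = JSON.stringify({
  GATSBY_FIREBASE_PROJECT_ID: "demo-project",
  GATSBY_FIREBASE_API_KEY: "not-a-real-key",
});
process.stdout.write(toDotEnv(secret));
// GATSBY_FIREBASE_API_KEY="not-a-real-key"
// GATSBY_FIREBASE_PROJECT_ID="demo-project"
```

Failing loudly on a double quote is deliberate. A silently mangled variable in a static build is much harder to track down than a failed CI run.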

Result!

After applying the Terraform modules and provisioning the resources, the following URLs will be exposed:

- Main Application CDN: https://demo.kodhive.com
- Unlimited environments with the preview CDN:
  - Pull Request 1 | Downy Color Preview: https://1-preview.demo.kodhive.com
  - Pull Request 2 | Portage Color Preview: https://2-preview.demo.kodhive.com
  - Pull Request 3 | Carnation Color Preview: https://3-preview.demo.kodhive.com
  - Etc...
- Public Media CDN: https://demo-public-media.kodhive.com
- Private Media CDN: https://demo-private-media.kodhive.com

That's all 🎉. Enjoy your serverless web application, unlimited preview environments and public/private media CDNs. However, if you are not in a rush, keep reading to see how we built it. You will not regret it.

“You take the blue pill, the story ends, you wake up in your bed and believe whatever you want to believe. You take the red pill, you stay in wonderland, and I show you how deep the rabbit hole goes.” Morpheus

What services will we use?

To ship static web applications, there are a few questions to ask first:

- Where will the static application be deployed?

As the name implies, we want something that can simply store static files. Since we are serverless lovers, we will not use an NGINX server; instead, we will leverage the awesome AWS S3.

- Who will be responsible for the CI/CD of the static files?

We've agreed to use S3, so we need a service that can integrate with the web application versioned in GitHub, build the static files and upload them to S3. Again, since we are serverless lovers, we will not use Jenkins; instead, we will leverage AWS CodeBuild/CodePipeline.

- Who will serve the static web application?

Building and storing static web applications is only the tip of the iceberg. We also need a service that can deliver those static files to end users securely, efficiently and cost-effectively.

We need a Content Delivery Network (CDN) that is serverless and accepts S3 as an origin. To stay in the AWS ecosystem, we will use AWS CloudFront.

- What will the CDN domains look like?

CloudFront generates random, ugly domain names, so we need to create good-looking DNS names under our custom domain name and point them to the default CloudFront-generated domain names. To achieve this, we will leverage a DNS provider like Route53 or Cloudflare.

- Who will certify the domain names?

To meet regulatory compliance requirements for encrypting data in transit, our domains need to be certified and to operate only over HTTPS. That means we have to acquire a certificate for our domains from a Certificate Authority, and to do that, we will use AWS Certificate Manager.

After agreeing on the components and the services to use, let's start coding!

Certify!

Source: Terraform AWS Certify

Enabling SSL/TLS for Internet-facing sites can help improve the search rankings for our site and help us meet regulatory compliance requirements for encrypting data in transit. Hence, we need to acquire an SSL/TLS certificate for our domains using AWS Certificate Manager because:

- Public SSL/TLS certificates provisioned through AWS Certificate Manager are free.

- ACM provides managed renewal for Amazon-issued SSL/TLS certificates. This means that ACM will renew the certificate automatically if we use DNS as the validation method.

Before provisioning the certificate we need to agree on the domain names that we will certify:

- demo.kodhive.com: the main application FQDN, pointing to the main CloudFront distribution.
- *.demo.kodhive.com: the previews' alternative wildcard domain name, pointing to the preview CloudFront distribution. It certifies both the preview domain preview.demo.kodhive.com and the pull request preview domains prNumber-preview.demo.kodhive.com.
- demo-public-media.kodhive.com: the public media domain name, pointing to the public media CloudFront distribution.
- demo-private-media.kodhive.com: the private media domain name, pointing to the private media CloudFront distribution.
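A certificate wildcard stands for exactly one DNS label. That is why the single *.demo.kodhive.com name covers preview.demo.kodhive.com and every N-preview.demo.kodhive.com, but not demo.kodhive.com itself, so the main FQDN has to be certified separately. Below is a minimal JavaScript sketch of that matching rule. `isCoveredBy` is a hypothetical helper, not part of the modules, and it ignores edge cases such as internationalized domain names.

```javascript
// Hypothetical helper: does a certificate listing `names` cover `host`?
// A wildcard such as *.demo.kodhive.com matches exactly one leftmost label.
function isCoveredBy(host, names) {
  const h = host.toLowerCase();
  return names.some((name) => {
    const n = name.toLowerCase();
    if (!n.startsWith("*.")) return n === h; // exact (non-wildcard) name
    const suffix = n.slice(1); // "*.demo.kodhive.com" -> ".demo.kodhive.com"
    if (!h.endsWith(suffix)) return false;
    const label = h.slice(0, h.length - suffix.length);
    // The wildcard stands for one non-empty label, so it cannot contain a dot.
    return label.length > 0 && !label.includes(".");
  });
}

const certNames = [
  "demo.kodhive.com",
  "*.demo.kodhive.com",
  "demo-public-media.kodhive.com",
  "demo-private-media.kodhive.com",
];

console.log(isCoveredBy("1-preview.demo.kodhive.com", certNames)); // true
console.log(isCoveredBy("preview.demo.kodhive.com", certNames)); // true
console.log(isCoveredBy("a.b.demo.kodhive.com", certNames)); // false
```

This is also why the preview hostnames use a dash (1-preview) rather than a dot (1.preview). A two-level name would fall outside the wildcard.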

We will provision a public certificate because we want to identify resources on the public Internet. We will validate it using DNS records instead of email to benefit from automatic renewal, because email-validated certificates require manual action to renew.

```hcl
# modules/route53/main.tf
resource "aws_acm_certificate" "_" {
  domain_name               = var.domain_name
  subject_alternative_names = var.alternative_domain_names
  validation_method         = "DNS"

  lifecycle {
    create_before_destroy = true
  }
}
```

We've provisioned a public certificate for the main domain and for the alternative domains (preview and media domains) using the aws_acm_certificate resource, and we have opted for DNS as the validation method.

After the certificate is created, ACM gives us the domain validation options that we will use to validate it:

```hcl
# modules/route53/main.tf
resource "aws_route53_record" "_" {
  # One DNS record per validation option returned by ACM
  for_each = {
    for dvo in aws_acm_certificate._.domain_validation_options : dvo.domain_name => {
      name   = dvo.resource_record_name
      record = dvo.resource_record_value
      type   = dvo.resource_record_type
    }
  }

  ttl             = 60
  zone_id         = var.r53_zone_id
  allow_overwrite = true
  name            = each.value.name
  type            = each.value.type
  records         = [each.value.record]
}

resource "aws_acm_certificate_validation" "_" {
  certificate_arn         = aws_acm_certificate._.arn
  validation_record_fqdns = [for record in aws_route53_record._ : record.fqdn]
}
```

For each validation option, we create a Route53 DNS record, and we then create a certificate validation resource that waits until ACM has validated the certificate. If you are using Cloudflare as your DNS provider, you can achieve the same with:

```hcl
# modules/cloudflare/main.tf
resource "cloudflare_record" "_" {
  for_each = {
    for dvo in aws_acm_certificate._.domain_validation_options : dvo.domain_name => {
      name   = dvo.resource_record_name
      record = dvo.resource_record_value
      type   = dvo.resource_record_type
    }
  }

  zone_id = var.cloudflare_zone_id
  proxied = false # validation records must not be proxied
  ttl     = 1     # 1 means "automatic" TTL in Cloudflare
  name    = each.value.name
  type    = each.value.type
  value   = each.value.record
}

resource "aws_acm_certificate_validation" "_" {
  certificate_arn         = aws_acm_certificate._.arn
  validation_record_fqdns = [for record in cloudflare_record._ : record.hostname]
}
```

AWS Certificate Manager might take some time to accept the validation. Even after validation, the certificate will not be eligible for managed renewal until it is associated with another AWS service; in our case, we will associate it with CloudFront.
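The for_each above is keyed by domain_name. As far as we know, ACM typically issues the same validation CNAME for a name and its wildcard, which here means demo.kodhive.com and *.demo.kodhive.com. Two map entries can therefore describe one DNS record. In the Route53 variant, allow_overwrite = true stops the second write of that record from failing. The JavaScript sketch below collapses validation options by the record they require. The input objects only mimic the shape of domain_validation_options, and the record names and values are made up.

```javascript
// Sketch: group ACM domain validation options by the DNS record they require.
function uniqueValidationRecords(options) {
  const records = new Map();
  for (const dvo of options) {
    const key = `${dvo.resource_record_type} ${dvo.resource_record_name}`;
    if (!records.has(key)) {
      records.set(key, {
        name: dvo.resource_record_name,
        type: dvo.resource_record_type,
        value: dvo.resource_record_value,
        domains: [],
      });
    }
    records.get(key).domains.push(dvo.domain_name);
  }
  return [...records.values()]; // Map keeps insertion order
}

// Hypothetical option builder with made-up record names and values.
const option = (domain, name, value) => ({
  domain_name: domain,
  resource_record_name: name,
  resource_record_type: "CNAME",
  resource_record_value: value,
});

const options = [
  option("demo.kodhive.com", "_a1.demo.kodhive.com.", "_v1.acm-validations.aws."),
  option("*.demo.kodhive.com", "_a1.demo.kodhive.com.", "_v1.acm-validations.aws."),
  option("demo-public-media.kodhive.com", "_b2.demo-public-media.kodhive.com.", "_v2.acm-validations.aws."),
];

for (const r of uniqueValidationRecords(options)) {
  console.log(`${r.type} ${r.name} -> ${r.value} (${r.domains.join(", ")})`);
}
```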

Finally, we will wrap those resources in a Terraform module and call the module like this:

```hcl
# modules/route53/components.tf
module "certification" {
  source                   = "git::https://github.com/obytes/terraform-aws-certify.git//modules/route53"
  r53_zone_id              = var.dns_zone_id
  domain_name              = var.main_fqdn
  alternative_domain_names = compact([local.preview_fqdn_wildcard, var.private_media_fqdn, var.public_media_fqdn])
}
```

Cloudfront Distribution Policies

Source: Shared Policies

Before creating the CloudFront distributions for the main production application, the PR previews and the public/private media, we need to prepare the shared policies that we will attach to the default cache behavior of all four distributions.

Controlling the cache key

The cache key is a unique identifier for each object cached at CloudFront edge locations.

A CloudFront cache hit happens when a viewer request generates the same cache key as a prior request and the object cached for that key is still valid (not expired). The object is then served to the viewer from the CloudFront edge location rather than from the S3 origin.

A CloudFront cache miss happens when CloudFront cannot find a valid object for the cache key at the edge location. CloudFront then sends the request to the S3 origin and caches the response at the edge location.
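How long a cached object stays valid depends on the TTLs of the cache policy defined below (min_ttl = 1, default_ttl = 86400, max_ttl = 31536000). As we read CloudFront's expiration rules, the origin's Cache-Control max-age is clamped into that range, and s-maxage takes precedence over max-age when present. The default TTL applies when the origin sends neither. The JavaScript sketch below is simplified: it ignores the Expires header and directives such as no-store or private. `edgeTtlSeconds` is a hypothetical name.

```javascript
// Simplified sketch: seconds an object stays cached at the edge, given the
// cache policy TTLs and the Cache-Control header returned by the origin.
const POLICY = { minTtl: 1, defaultTtl: 86400, maxTtl: 31536000 };

function edgeTtlSeconds(cacheControl, policy = POLICY) {
  const directives = {};
  for (const part of (cacheControl || "").split(",")) {
    const [name, value] = part.trim().toLowerCase().split("=");
    if (name) directives[name] = value;
  }
  // s-maxage targets shared caches such as CloudFront and wins over max-age.
  const raw = directives["s-maxage"] ?? directives["max-age"];
  if (raw === undefined) return policy.defaultTtl; // origin gave no lifetime
  const seconds = Number(raw);
  if (!Number.isFinite(seconds)) return policy.defaultTtl;
  return Math.min(Math.max(seconds, policy.minTtl), policy.maxTtl);
}

console.log(edgeTtlSeconds(undefined)); // 86400
console.log(edgeTtlSeconds("public, max-age=0")); // 1
console.log(edgeTtlSeconds("max-age=600, s-maxage=3600")); // 3600
console.log(edgeTtlSeconds("max-age=999999999")); // 31536000
```

S3 only returns Cache-Control when it was set on the uploaded objects. Without it, files would typically live for the one-day default TTL, which is one reason the CI pipeline invalidates the edge cache after each deployment.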

To improve our cache hit ratio and get better performance from the web application, we will include only the minimum necessary parts in the cache key. This is why we avoid including cookies, query strings and headers in the cache key: the origin does not need them to serve the content. CloudFront will then construct the cache key from its default information, which is the domain name and the URL path.

```hcl
# components/shared/policies/cache_policy.tf
resource "aws_cloudfront_cache_policy" "_" {
  name        = "${local.prefix}-caching-policy"
  comment     = "Policy for caching optimized CDN"
  min_ttl     = 1
  max_ttl     = 31536000
  default_ttl = 86400

  parameters_in_cache_key_and_forwarded_to_origin {
    # Compression support (adds the normalized Accept-Encoding to the key)
    enable_accept_encoding_gzip   = true
    enable_accept_encoding_brotli = true

    # Keep cookies, query strings and headers out of the cache key
    cookies_config {
      cookie_behavior = "none"
    }
    query_strings_config {
      query_string_behavior = "none"
    }
    headers_config {
      header_behavior = "none"
    }
  }
}
```
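To illustrate what this key leaves out, here is a JavaScript sketch of the cache key the policy implies, which is only the host and the path. The key format is invented for illustration, since CloudFront does not expose its internal key. It also leaves out the normalized Accept-Encoding value that the compression flags add to the real key. `cacheKey` is a hypothetical helper.

```javascript
// Sketch: the cache key implied by the policy above. Cookies, query strings and
// headers are excluded, so only the host and the URL path tell objects apart.
function cacheKey(request) {
  const url = new URL(request.url);
  return `${url.host}${url.pathname}`; // request.cookies/headers are ignored
}

const v1 = cacheKey({ url: "https://demo.kodhive.com/app.js?v=1", cookies: "session=1" });
const v2 = cacheKey({ url: "https://demo.kodhive.com/app.js?v=2", cookies: "session=2" });
const preview = cacheKey({ url: "https://1-preview.demo.kodhive.com/app.js" });

console.log(v1 === v2); // true: one cache entry serves both requests
console.log(v1 === preview); // false: another host is another key
```

The trade-off is that with this policy, versioning assets through query strings such as ?v=2 no longer busts the cache. Changed files need new paths or an invalidation, which the CI pipeline performs after each deployment.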

In addition to caching, we are also enabling Gzip and Brotli compression. We have enabled both encoding methods to support viewers asking for Brotli-encoded objects as well as viewers asking for Gzip-encoded objects.

Viewers choose an encoding method by sending the Accept-Encoding header. If the Accept-Encoding header is missing from the viewer request, or if it contains neither gzip nor br, CloudFront does not compress the object in the response.

If the Accept-Encoding header includes additional values such as deflate, CloudFront removes them before forwarding the request to the origin.

When the viewer supports both Gzip and Brotli, CloudFront prefers Brotli, which typically produces smaller files.
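The encoding choice described above can be sketched in JavaScript as follows. `chooseEncoding` is a hypothetical helper that returns the content coding CloudFront would use for a given Accept-Encoding value under this policy, as we understand it. It ignores q-values, and CloudFront also looks at the file type and size before compressing.

```javascript
// Sketch of the encoding decision: Brotli if the viewer accepts it, else Gzip,
// else no compression. Parameters such as ";q=0.8" are dropped, not weighed.
function chooseEncoding(acceptEncoding) {
  const accepted = (acceptEncoding || "")
    .split(",")
    .map((token) => token.split(";")[0].trim().toLowerCase())
    .filter((token) => token.length > 0);
  if (accepted.includes("br")) return "br";
  if (accepted.includes("gzip")) return "gzip";
  return "identity"; // header missing, or only other codings such as deflate
}

console.log(chooseEncoding("gzip, deflate, br")); // br
console.log(chooseEncoding("gzip;q=1.0, deflate")); // gzip
console.log(chooseEncoding("deflate")); // identity
console.log(chooseEncoding(undefined)); // identity
```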

Controlling origin requests

When a viewer request to CloudFront results in a cache miss (the requested object is not cached at the edge location), CloudFront sends a request to the origin to retrieve the object. This is called an origin request.

By default, CloudFront includes the following information in the origin request:

- The URL path, without the domain name and without the query string.
- The request body, if any.
- The default CloudFront HTTP headers (Host, User-Agent and X-Amz-Cf-Id).

Cloudfront gives us the ability to extend the information passed to the origin by using a Cloudfront Origin Request Policy.

```hcl
# components/shared/policies/origin_policy.tf
resource "aws_cloudfront_origin_request_policy" "_" {
  name    = "${local.prefix}-origin-policy"
  comment = "Policy for S3 origin with CORS"

  cookies_config {
    cookie_behavior = "none"
  }
  query_strings_config {
    query_string_behavior = "all"
  }
  headers_config {
    header_behavior = "whitelist"
    headers {
      items = [
        "x-forwarded-host",
        "origin",
        "access-control-request-headers",
        "x-forwarded-for",
        "access-control-request-method",
        "user-agent",
        "referer"
      ]
    }
  }
}
```

For our use case, we will not forward cookies to the S3 origins. However, we will forward all query string variables and a set of HTTP headers. We forward these headers because we need them if we ever want to add further restrictions at the S3 origin level with S3 bucket policies, based on the client IP (x-forwarded-for) or the referring page (referer).
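Here is a JavaScript sketch of the origin request this policy produces on a cache miss. Whitelisted headers pass through, cookies are dropped and the full query string is kept. `originRequest` is a hypothetical helper, and CloudFront's own default headers, such as X-Amz-Cf-Id, are left out.

```javascript
// Whitelisted header names from the origin request policy (lowercase).
const FORWARDED_HEADERS = new Set([
  "x-forwarded-host",
  "origin",
  "access-control-request-headers",
  "x-forwarded-for",
  "access-control-request-method",
  "user-agent",
  "referer",
]);

// Sketch: which parts of a viewer request reach the S3 origin on a cache miss.
function originRequest(viewer) {
  const url = new URL(viewer.url);
  const headers = {};
  for (const [name, value] of Object.entries(viewer.headers || {})) {
    const lower = name.toLowerCase();
    // Cookie is not whitelisted, consistent with cookie_behavior = "none".
    if (FORWARDED_HEADERS.has(lower)) headers[lower] = value;
  }
  // query_string_behavior = "all": the whole query string is forwarded.
  return { path: url.pathname, query: url.search, headers };
}

const forwarded = originRequest({
  url: "https://demo.kodhive.com/blog/?utm_source=newsletter",
  headers: {
    Cookie: "session=abc",
    Referer: "https://example.com/",
    "Accept-Language": "en",
  },
});
console.log(forwarded);
```

The query string is forwarded but, under the cache policy, it is not part of the cache key. The origin sees it, yet it does not create separate cache entries.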

Adding Response Headers

By using a CloudFront response headers policy, we can configure CloudFront to return additional headers to the viewer, on top of the headers returned by the S3 origins. These headers are often used to transfer security-related metadata to the viewers.

For our use case, we will use the policy to add a CORS configuration and security headers to the viewer response:

- Cross-Origin Resource Sharing (CORS): an HTTP-header-based mechanism that allows a server to indicate any origins (domain, scheme, or port) other than its own from which a browser should permit loading resources.
- HTTP Security Headers: headers used to increase the security of web applications. Once set, these HTTP response headers can prevent modern browsers from running into easily preventable vulnerabilities.

```hcl
# components/shared/policies/response_headers_policies.tf
resource "aws_cloudfront_response_headers_policy" "_" {
  name = "${local.prefix}-security-headers"

  cors_config {
    origin_override                  = true
    access_control_max_age_sec       = 86400
    access_control_allow_credentials = false

    access_control_allow_origins {
      items = ["*"]
    }
    access_control_allow_headers {
      items = ["*"]
    }
    access_control_allow_methods {
      items = ["GET", "HEAD", "OPTIONS"]
    }
  }

  security_headers_config {
    strict_transport_security {
      override                   = true
      preload                    = true
      include_subdomains         = true
      access_control_max_age_sec = 31536000
    }
    content_security_policy {
      override                = true
      content_security_policy = var.content_security_policy
    }
    frame_options {
      override     = true
      frame_option = "SAMEORIGIN"
    }
    content_type_options {
      override = true
    }
    referrer_policy {
      override        = true
      referrer_policy = "strict-origin-when-cross-origin"
    }
    xss_protection {
      override   = true
      mode_block = true
      protection = true
    }
  }
}
```

For our distributions, we allow loading resources from all origins, and we accept all headers. However, we only allow the read-only HTTP methods ["GET", "HEAD", "OPTIONS"], since viewers should never modify content in the S3 origins.

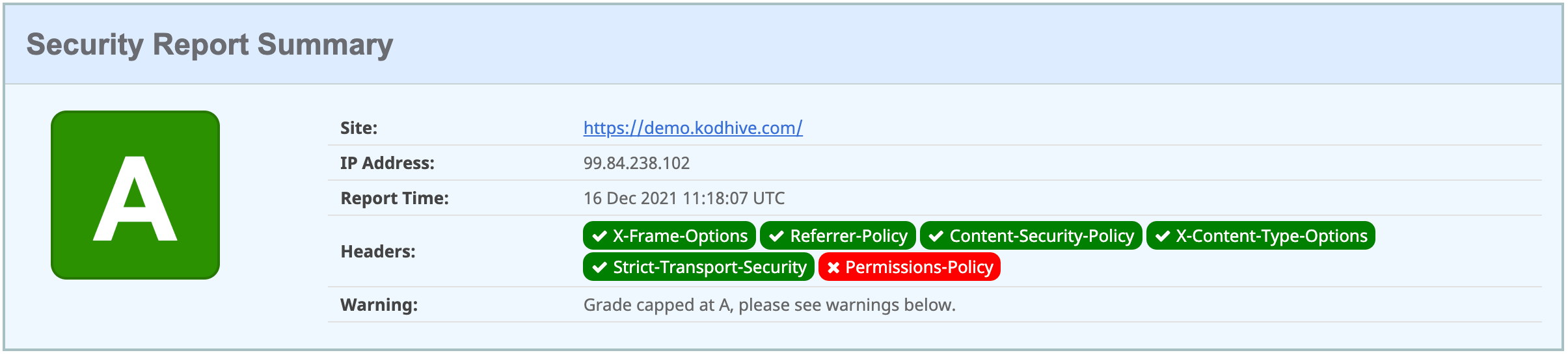
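In CORS terms, the policy attaches headers along the lines of the ones built below, and a browser checks the method requested in a preflight against Access-Control-Allow-Methods. One subtlety comes from the Fetch standard: GET, HEAD and POST are CORS-safelisted methods, so they pass that check even when they are not listed. CORS alone therefore does not make the content read-only. The write protection comes from the bucket granting only public-read. Here is a simplified JavaScript sketch. `corsHeaders` and `preflightAllows` are hypothetical helpers, and real browsers also check the requested headers.

```javascript
// Headers that the cors_config above adds to responses (simplified).
function corsHeaders() {
  return {
    "access-control-allow-origin": "*",
    "access-control-allow-headers": "*",
    "access-control-allow-methods": "GET, HEAD, OPTIONS",
    "access-control-max-age": "86400",
  };
}

// Per the Fetch standard, these methods pass the preflight method check
// even when they are not listed in Access-Control-Allow-Methods.
const CORS_SAFELISTED_METHODS = ["GET", "HEAD", "POST"];

// Simplified browser-side method check on a preflight response.
function preflightAllows(method, headers) {
  const m = method.toUpperCase();
  const listed = headers["access-control-allow-methods"]
    .split(",")
    .map((item) => item.trim().toUpperCase());
  // "*" counts as a wildcard only for requests without credentials.
  return CORS_SAFELISTED_METHODS.includes(m) || listed.includes("*") || listed.includes(m);
}

const headers = corsHeaders();
console.log(preflightAllows("GET", headers)); // true
console.log(preflightAllows("PUT", headers)); // false
console.log(preflightAllows("DELETE", headers)); // false
```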

For the security headers, we've configured the following:

- strict_transport_security: tells browsers that our application should only be accessed over HTTPS, never over HTTP.
- content_security_policy: controls the resources the user agent is allowed to load for a given page, by specifying the allowed server origins and script endpoints. This helps guard against cross-site scripting attacks.
- frame_options: blocks web browsers from rendering our application in a <frame>, <iframe>, <embed> or <object> unless the embedding page has the same origin as ours, which helps us avoid clickjacking attacks.

  Typically, clickjacking is performed by displaying an invisible page or HTML element, inside an iframe, on top of the page the user sees. The user believes they are clicking the visible page, but they are in fact clicking an invisible element in the additional page transposed on top of it.

- content_type_options: prevents MIME type sniffing by declaring that the advertised MIME types are deliberate and must be followed. For example, a request is blocked if its destination is of type style and the MIME type is not text/css, or if its destination is of type script and the MIME type is not a JavaScript MIME type.
- referrer_policy: controls how much referrer information (sent with the Referer header) is included with requests. With strict-origin-when-cross-origin, browsers send the origin, path and query string for same-origin requests. For cross-origin requests, they send only the origin, and only when the protocol security level stays the same (HTTPS→HTTPS). They send no Referer header to less secure destinations (HTTPS→HTTP). This helps us avoid leaking users' data when linking to other websites like Facebook or Twitter.
- xss_protection: instructs browsers to stop pages from loading when they detect reflected cross-site scripting.

Setting the security headers above will promote our website to an A grade 😎
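To check a deployment, it helps to know roughly which header values these settings correspond to. The JavaScript sketch below lists those values, with a hypothetical `missingSecurityHeaders` helper that reports which of them a response lacks. The exact formatting CloudFront emits may differ, so the helper only checks that each header is present. The CSP value is whatever var.content_security_policy holds.

```javascript
// Sketch: header values that the security_headers_config above corresponds to.
function expectedSecurityHeaders(csp) {
  return {
    "strict-transport-security": "max-age=31536000; includeSubDomains; preload",
    "content-security-policy": csp,
    "x-frame-options": "SAMEORIGIN",
    "x-content-type-options": "nosniff",
    "referrer-policy": "strict-origin-when-cross-origin",
    "x-xss-protection": "1; mode=block",
  };
}

// Lists the expected security headers that a response does not carry at all
// (names are compared case-insensitively; values are not checked).
function missingSecurityHeaders(responseHeaders, csp) {
  const present = new Set(Object.keys(responseHeaders).map((n) => n.toLowerCase()));
  return Object.keys(expectedSecurityHeaders(csp)).filter((n) => !present.has(n));
}

console.log(
  missingSecurityHeaders(
    { "Strict-Transport-Security": "max-age=31536000", "X-Frame-Options": "SAMEORIGIN" },
    "default-src * 'unsafe-inline'"
  )
);
```

Against a live distribution, Node.js 18+ could pass it `Object.fromEntries((await fetch("https://demo.kodhive.com/")).headers)`.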

Finally, we will wrap the cache policy, origin request policy and the response header policy resources in a Terraform module and call the module like this:

```hcl
# modules/webapp/components.tf
module "webapp_policies" {
  source                  = "../../components/shared/policies"
  prefix                  = local.prefix
  common_tags             = local.common_tags
  content_security_policy = var.content_security_policy
}
```

Viewer Requests URL Rewrite Function

Source: Cloudfront Function

Deploying web applications to S3 and serving them via CloudFront may seem easy. However, there are some caveats you need to know, especially if you are trying to serve single page applications or statically generated websites.

Statically generated websites, like the ones generated by GatsbyJS, contain an index.html for each page, which we eventually upload to S3. However, web browsers do not append index.html to the requested path, which results in several issues:

- S3 will not find the requested object, so it will redirect us to the root index.html if we have already set the error page to index.html, which is fine. In the worst case, however, it will redirect us to CloudFront's default error page, indicating that the object was not found.
- If we are redirected to index.html, S3 will swallow the query strings during the redirect.
- Search engines will be confused, because the same content will be reachable under multiple URLs: the ones with a trailing slash and the ones without. This can hurt SEO rankings.

To fix these issues, we will create a CloudFront viewer request function that rewrites the bad URLs and also routes the preview CDN requests to the target S3 prefix.

```javascript
// components/shared/url-rewrite-function/url_rewrite_function.js

// True when the last path segment looks like a file name (contains a dot).
function pointsToFile(uri) {
  return /\/[^/]+\.[^/]+$/.test(uri);
}

function hasTrailingSlash(uri) {
  return uri.endsWith('/');
}

function needsTrailingSlash(uri) {
  return !pointsToFile(uri) && !hasTrailingSlash(uri);
}

// "12-preview.demo.kodhive.com" -> "12"; a host without "-preview" -> "".
function previewPRId(host) {
  if (host && host.value) {
    return host.value.substring(0, host.value.lastIndexOf('-preview'));
  }
}

// Rebuild the query string from the CloudFront Functions querystring object,
// repeating keys that carry multiple values (a=1&a=2).
function objectToQueryString(obj) {
  var str = [];
  for (var param in obj) {
    if (obj[param].multiValue) {
      obj[param].multiValue.forEach(function (item) {
        str.push(param + '=' + item.value);
      });
    } else if (obj[param].value === '') {
      str.push(param);
    } else {
      str.push(param + '=' + obj[param].value);
    }
  }
  return str.join('&');
}

function handler(event) {
  console.log(JSON.stringify(event));
  var request = event.request;
  var host = request.headers.host;

  // Extract the original URI and query string from the request.
  var original_uri = request.uri;
  var qs = request.querystring;

  // If needed, redirect to the same URI with a trailing slash, preserving the query string.
  if (needsTrailingSlash(original_uri)) {
    console.log(`${original_uri} needs trailing slash, redirect!`);
    return {
      statusCode: 302,
      statusDescription: 'Moved Temporarily',
      headers: {
        location: {
          value: Object.keys(qs).length
            ? `${original_uri}/?${objectToQueryString(qs)}`
            : `${original_uri}/`,
        },
      },
    };
  }

  // Match any '/' at the end of the URI and replace it with a default index.
  // Useful for single page applications or statically generated websites hosted in S3.
  var new_uri = original_uri.replace(/\/$/, '/index.html');

  var pr_id = previewPRId(host);
  if (pr_id) {
    // Preview CDN: rewrite the URI to the target preview S3 prefix.
    request.uri = `/${pr_id}${new_uri}`;
  } else {
    // Other CDNs: use the URI that includes the index page.
    request.uri = new_uri;
  }

  console.log(`Original URI: ${original_uri}`);
  console.log(`New URI: ${request.uri}`);
  return request;
}
```

The function will:

- Redirect to the same URI with a trailing slash, to make sure we don't have duplicate content and that all website URLs end with a trailing slash.
- Preserve query string variables during the redirection.
- Match any '/' that occurs at the end of a URI and replace it with a default index, to support single page applications and statically generated websites.
- Finally, if the domain name belongs to the preview CDN, extract the pull request identifier and prefix the URI with it, to route the request to the preview S3 prefix.
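To try `handler` outside CloudFront, for example in Node.js, you need an event object shaped like the one CloudFront Functions passes in. Below is a hypothetical helper that builds a minimal viewer-request event from a URL. It assumes the documented structure: lowercase header names, and querystring entries with `value` plus a `multiValue` array for repeated keys. URLSearchParams decodes the values, which may not match exactly what CloudFront delivers, and fields the handler does not read are omitted.

```javascript
// Hypothetical test helper: a minimal CloudFront Functions viewer-request event.
function toViewerRequestEvent(rawUrl) {
  const url = new URL(rawUrl);
  const querystring = {};
  for (const [name, value] of url.searchParams) {
    if (!querystring[name]) {
      querystring[name] = { value }; // first (or only) value for this key
    } else {
      // Repeated key: keep the first value and list all of them in multiValue.
      const entry = querystring[name];
      entry.multiValue = entry.multiValue || [{ value: entry.value }];
      entry.multiValue.push({ value });
    }
  }
  return {
    version: "1.0",
    context: { eventType: "viewer-request" },
    request: {
      method: "GET",
      uri: url.pathname,
      querystring,
      headers: { host: { value: url.host } },
      cookies: {},
    },
  };
}

const sampleEvent = toViewerRequestEvent("https://1-preview.demo.kodhive.com/about?tag=a&tag=b");
console.log(JSON.stringify(sampleEvent.request, null, 2));
```

With url_rewrite_function.js pasted into the same file, `handler(sampleEvent)` should return a 302 response whose location starts with /about/ and carries the query string. For a URL ending in `/about/`, the returned request's uri should instead become `/1/about/index.html`.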

After preparing our function, we will provision and publish it using the aws_cloudfront_function resource.

```hcl
# components/shared/url-rewrite-function/url_rewrite_function.tf
resource "aws_cloudfront_function" "url_rewrite_viewer_request" {
  name    = "${local.prefix}-url-rewrite"
  code    = file("${path.module}/url_rewrite_function.js")
  comment = "URL Rewrite for single page applications or statically generated websites"
  runtime = "cloudfront-js-1.0"
  publish = true
}
```

This is not a Lambda@Edge function; it's a CloudFront Function. It has a maximum execution time of less than 1 millisecond (instead of 5 seconds for Lambda@Edge viewer triggers). It runs in 218+ CloudFront edge locations (instead of 13 CloudFront regional edge caches), and it costs approximately 1/6th the price of Lambda@Edge 😎.

The Signer

Source: Signer

To restrict access to documents, business data, media streams, or content intended for selected users, we have to set up a CloudFront signer. It lets us restrict users' access to private content through special CloudFront signed URLs.

First, we need to create an RSA key pair. The private key will be used to generate the pre-signed URLs, and the CloudFront distribution will use the public key to verify their integrity.

```shell
# components/shared/signer/generate_key_pairs.sh
openssl genrsa -out private_key.pem 2048
openssl rsa -pubout -in private_key.pem -out public_key.pem
```

Now that we have the key pair, we will use the public key to create the CloudFront key group, which we will associate as a trusted key group with the private media CloudFront distribution.

```hcl
# components/shared/signer/signer.tf
resource "aws_cloudfront_public_key" "_" {
  name        = local.prefix
  encoded_key = var.public_key
}

resource "aws_cloudfront_key_group" "_" {
  name  = local.prefix
  items = [aws_cloudfront_public_key._.id]
}
```

Finally, we will wrap those resources in a Terraform module and call the module like this:

```hcl
# modules/webapp/components.tf
module "webapp_private_media_signer" {
  source      = "../../components/shared/signer"
  prefix      = local.prefix
  common_tags = local.common_tags
  public_key  = var.media_signer_public_key
}
```

As for the private key, we will discuss later in this article how we use it to sign URLs.
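Until then, here is a minimal Node.js sketch of one way to sign with it. It uses a canned policy (a single URL and an expiry time) as described in CloudFront's signed URL documentation. `signCannedUrl` is a hypothetical helper, not the authors' implementation. The key pair ID stands in for the ID of the aws_cloudfront_public_key resource. In practice, the AWS SDK's `@aws-sdk/cloudfront-signer` package provides a `getSignedUrl` function for the same job.

```javascript
const crypto = require("crypto");

// Sketch: sign a CloudFront URL with a canned policy.
// Assumes `url` has no query string yet.
function signCannedUrl(url, keyPairId, privateKeyPem, expiresAtEpochSec) {
  // JSON.stringify emits no whitespace, matching the canned policy format.
  const policy = JSON.stringify({
    Statement: [
      {
        Resource: url,
        Condition: { DateLessThan: { "AWS:EpochTime": expiresAtEpochSec } },
      },
    ],
  });
  // RSA-SHA1 signature over the policy, base64-encoded, then made URL-safe
  // by replacing +, = and / with -, _ and ~.
  const signature = crypto
    .sign("sha1", Buffer.from(policy), privateKeyPem)
    .toString("base64")
    .replace(/\+/g, "-")
    .replace(/=/g, "_")
    .replace(/\//g, "~");
  return `${url}?Expires=${expiresAtEpochSec}&Signature=${signature}&Key-Pair-Id=${keyPairId}`;
}

// Demo with a throwaway key pair; in production, read private_key.pem instead.
const demoKeys = crypto.generateKeyPairSync("rsa", {
  modulusLength: 2048,
  publicKeyEncoding: { type: "spki", format: "pem" },
  privateKeyEncoding: { type: "pkcs8", format: "pem" },
});
const expires = Math.floor(Date.now() / 1000) + 300; // valid for 5 minutes
console.log(
  signCannedUrl(
    "https://demo-private-media.kodhive.com/invoices/2021-10.pdf",
    "KEXAMPLEKEYID", // placeholder key pair ID
    demoKeys.privateKey,
    expires
  )
);
```

The signing must happen on a trusted backend, because whoever holds private_key.pem can mint links to any private object.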

The Main/Preview Application CDNs

The Content Delivery Network is the system component that sits between the viewers and the S3 origin. It manages content delivery and ensures that our content is delivered efficiently, securely and cost-effectively. For this, we will leverage the CloudFront network.

The Public S3 Origin

Before creating the cloudfront distributions, we need an S3 bucket to host the web application's static files:

```hcl
# components/cdn/public/s3.tf
resource "aws_s3_bucket" "_" {
  bucket = var.fqdn
  acl    = "public-read"

  website {
    index_document = local.index_document
    error_document = local.error_document
  }

  force_destroy = true

  tags = merge(
    local.common_tags,
    { usage = "Host web application static content delivered by s3" }
  )
}
```

For the access control list, we've chosen public-read because the S3 bucket content is just a public static web application that anyone can access in read-only mode.

There are two ways to connect CloudFront to an S3 origin:

- Set up an S3 origin, which lets us configure CloudFront to access our bucket through IAM (via an origin access identity). Unfortunately, this also makes it impossible to perform server-side (301/302) redirects, and it means that directory indexes will only work in the root directory.
- Set up the S3 bucket's static website hosting endpoint as the CloudFront origin.

For all the features of our site to work correctly, we must use the S3 bucket's static website hosting endpoint as the CloudFront origin. This means that our bucket has to be configured for public-read, because when CloudFront uses an S3 static website hosting endpoint address as the origin, it cannot authenticate via IAM.

If the S3 bucket is intended to be used as the preview bucket, we should create a default index document and an error document at the bucket root, because the previews themselves will be uploaded under S3 prefixes, not to the bucket root:

Index document: served when the viewer accesses the preview domain without providing a pull request number (e.g. https://preview.demo.kodhive.com). It contains a welcome message confirming that this is the correct preview CDN.

# components/cdn/public/root_objects.tf

resource "aws_s3_bucket_object" "index_document" {

count = var.is_preview ? 1:0

key = local.index_document

acl = "public-read"

bucket = aws_s3_bucket._.id

content = "Welcome to the preview CDN, use https://[pull_request_number]-${var.fqdn} to access a PR preview."

content_type = "text/html"

}

Error document: served when the viewer requests a preview that does not exist in the S3 bucket (e.g. https://4040404040404040404040404-preview.demo.kodhive.com).

# components/cdn/public/root_objects.tf

resource "aws_s3_bucket_object" "error_document" {

count = var.is_preview ? 1:0

key = local.error_document

acl = "public-read"

bucket = aws_s3_bucket._.id

content = "Found no preview for this pull request!"

content_type = "text/html"

}
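To make the role of the two root documents concrete, here is a simplified, hypothetical Python model of how a preview request could be resolved. It assumes that the URL rewrite function turns a host such as 42-preview.demo.kodhive.com into a /42 URI prefix, and that local.index_document and local.error_document are index.html and error.html. Neither assumption comes from the module's code. Real S3 website endpoints also have behaviours that the model ignores, such as redirecting /42 to /42/.

```python
from typing import Set, Tuple

# Assumed values of local.index_document / local.error_document (hypothetical)
INDEX_DOCUMENT = "index.html"
ERROR_DOCUMENT = "error.html"


def rewrite_uri(host: str, uri: str, preview_base_fqdn: str) -> str:
    """Prefix the URI with the PR number of a '<pr>-<preview_base_fqdn>' host."""
    suffix = "-" + preview_base_fqdn
    if host.endswith(suffix):
        pr_number = host[: -len(suffix)]
        if pr_number.isdigit():
            return f"/{pr_number}{uri}"
    # The bare preview domain keeps its URI, so it hits the bucket root
    return uri


def resolve_key(uri: str, existing_keys: Set[str]) -> Tuple[int, str]:
    """Rough S3 website endpoint behaviour: folders map to their index document."""
    key = uri.lstrip("/")
    if key == "" or key.endswith("/"):
        key += INDEX_DOCUMENT
    if key in existing_keys:
        return 200, key
    # Unknown previews fall back to the bucket's error document
    return 404, ERROR_DOCUMENT


if __name__ == "__main__":
    keys = {INDEX_DOCUMENT, ERROR_DOCUMENT, "42/index.html"}
    base = "preview.demo.kodhive.com"
    for host in (base, "42-" + base, "4040-" + base):
        print(host, resolve_key(rewrite_uri(host, "/", base), keys))
```

In this model, the bare domain lands on the welcome index document, PR 42 lands on its own build, and an unknown PR lands on the error document. This is why the preview bucket needs both root objects.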

The Cloudfront Distributions

We will provision CloudFront distributions to tell CloudFront where we want content to be delivered from, and how to track and manage content delivery.

We will associate the ACM certificate with the distributions. We will also associate the previously created policies and the URL rewrite function with the default cache behaviour.

# components/cdn/public/cloudfront.tf

resource "aws_cloudfront_distribution" "_" {

#######################

# General

#######################

enabled = true

is_ipv6_enabled = true

wait_for_deployment = true

http_version = "http2"

comment = var.comment

default_root_object = local.index_document

price_class = var.price_class

aliases = length(var.domain_aliases) > 0 ? var.domain_aliases : [var.fqdn]

#######################

# WEB Origin config

#######################

origin {

domain_name = aws_s3_bucket._.website_endpoint

origin_id = local.web_origin_id

custom_origin_config {

http_port = 80

https_port = 443

origin_protocol_policy = "http-only"

origin_ssl_protocols = [

"TLSv1",

"TLSv1.1",

"TLSv1.2",

]

}

}

#########################

# Certificate configuration

#########################

viewer_certificate {

acm_certificate_arn = var.cert_arn

ssl_support_method = "sni-only"

minimum_protocol_version = "TLSv1.2_2021"

cloudfront_default_certificate = false

}

#######################

# Logging configuration

#######################

dynamic "logging_config" {

for_each = var.s3_logging != null ? [1] : []

content {

bucket = var.s3_logging.address

prefix = "cloudfront"

include_cookies = false

}

}

#######################

# WEB Caching configuration

#######################

default_cache_behavior {

target_origin_id = local.web_origin_id

allowed_methods = [ "GET", "HEAD", "OPTIONS", ]

cached_methods = [ "GET", "HEAD", "OPTIONS", ]

compress = true

cache_policy_id = var.cache_policy_id

origin_request_policy_id = var.origin_request_policy_id

response_headers_policy_id = var.response_headers_policy_id

viewer_protocol_policy = "redirect-to-https"

dynamic "function_association" {

for_each = var.url_rewrite_function_arn != null ? [1] : []

content {

event_type = "viewer-request"

function_arn = var.url_rewrite_function_arn

}

}

}

#######################

# Restrictions

#######################

restrictions {

geo_restriction {

restriction_type = "none"

}

}

tags = local.common_tags

}

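Notice that we have used website_endpoint as the origin domain name. S3 website endpoints only speak HTTP, which is why the origin is declared with a custom_origin_config using "http-only" rather than with an s3_origin_config.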

Finally, we will wrap those resources in a Terraform module and call the module to create the main application CDN and the Pull Requests preview CDN:

- Main application CDN:

module "webapp_main_cdn" {

count = var.enable.main ? 1:0

source = "../../components/cdn/public"

common_tags = local.common_tags

# DNS

fqdn = var.main_fqdn

cert_arn = var.acm_cert_arn

# Cloudfront

comment = "Main | ${var.comment}"

cache_policy_id = module.webapp_policies.cache_policy_id

origin_request_policy_id = module.webapp_policies.origin_request_policy_id

response_headers_policy_id = module.webapp_policies.response_headers_policy_id

is_preview = false

url_rewrite_function_arn = module.url_rewrite_function.arn

}

The domain alias allowed by the main cloudfront distribution is var.main_fqdn, which is demo.kodhive.com in our case.

The main S3 bucket will be named demo.kodhive.com. We have disabled the preview by setting is_preview to false so that the index and error documents are not created, because the root of this bucket will contain the web application's own index document and we shouldn't override it.

- Pull Requests preview CDN:

module "webapp_pr_preview_cdn" {

count = var.enable.preview ? 1:0

source = "../../components/cdn/public"

common_tags = local.common_tags

# DNS

fqdn = local.preview_fqdn_base

cert_arn = var.acm_cert_arn

domain_aliases = [ local.preview_fqdn_base, local.preview_fqdn_wildcard ]

# Cloudfront

comment = "Preview | ${var.comment}"

cache_policy_id = module.webapp_policies.cache_policy_id

origin_request_policy_id = module.webapp_policies.origin_request_policy_id

response_headers_policy_id = module.webapp_policies.response_headers_policy_id

is_preview = true

url_rewrite_function_arn = module.url_rewrite_function.arn

}

The domain aliases allowed by the preview cloudfront distribution are local.preview_fqdn_base and local.preview_fqdn_wildcard, which are respectively preview.demo.kodhive.com and *-preview.demo.kodhive.com in our case.

The preview S3 bucket will be named preview.demo.kodhive.com, and we have enabled the preview by setting is_preview to true to create the index and error documents for the preview requests.

The Private/Public Media CDNs

In addition to the main web application CDN and the Pull Requests preview CDN, we want to provision media CDNs to store and serve public website media and user-specific private media.

The Private S3 Origin

In the previous section we used a public S3 bucket, but this time we will use a private S3 bucket because:

- For public media: the content is not a web application. It consists only of media files that do not need an S3 website endpoint.

- For private media: we want to serve users' private media, so the bucket needs to be private, and only CloudFront should be able to access its content, through an IAM bucket policy.

# components/cdn/private/s3.tf

resource "aws_s3_bucket" "_" {

bucket = var.fqdn

acl = "private"

server_side_encryption_configuration {

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

versioning {

enabled = true

}

tags = local.common_tags

force_destroy = true

}

We've also enabled versioning and set AES256 server-side encryption (SSE-S3) as the bucket's default encryption, so any uploaded content is automatically encrypted with keys managed by S3.

We've chosen AES256 instead of KMS because a CloudFront origin access identity (OAI) cannot read objects encrypted with SSE-KMS. With KMS, the media could not be served through the distribution at all, whether pre-signed or not.

As we pointed out earlier, CloudFront must be allowed to get content from the S3 bucket. For this, we need to create a special CloudFront user called an Origin Access Identity (OAI), allow that identity to access the S3 bucket, and associate the identity with the CloudFront distribution.

# components/cdn/private/cloudfront.tf

resource "aws_cloudfront_origin_access_identity" "_" {

comment = local.prefix

}

After that, we create an IAM policy document allowing the CloudFront Origin Access Identity to perform the "s3:ListBucket" and "s3:GetObject" actions, and we attach it to the S3 bucket as a bucket policy.

# components/cdn/private/iam.tf

data "aws_iam_policy_document" "_" {

statement {

actions = [

"s3:ListBucket",

"s3:GetObject",

]

resources = [

aws_s3_bucket._.arn,

"${aws_s3_bucket._.arn}/*",

]

principals {

type = "AWS"

identifiers = [

aws_cloudfront_origin_access_identity._.iam_arn,

]

}

}

}

resource "aws_s3_bucket_policy" "_" {

bucket = aws_s3_bucket._.id

policy = data.aws_iam_policy_document._.json

}
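For reference, here is a Python sketch that builds a policy equivalent to the one Terraform renders, so you can inspect it or reuse it outside Terraform. The OAI ARN is a placeholder, and Terraform's exact output may differ cosmetically, for example by including an empty Sid. Granting s3:ListBucket alongside s3:GetObject has a useful side effect: S3 answers requests for missing keys with 404 instead of 403.

```python
import json


def oai_bucket_policy(bucket_arn: str, oai_iam_arn: str) -> dict:
    """Bucket policy letting a CloudFront OAI list the bucket and read its objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": [oai_iam_arn]},
                "Action": ["s3:ListBucket", "s3:GetObject"],
                # ListBucket applies to the bucket ARN, GetObject to the object ARNs
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


if __name__ == "__main__":
    policy = oai_bucket_policy(
        "arn:aws:s3:::demo-private-media.kodhive.com",
        # Placeholder OAI ARN, not a real identity
        "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity EXAMPLE123",
    )
    print(json.dumps(policy, indent=2))
```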

Additionally, we create a public access block to block public ACLs and public policies:

# components/cdn/private/access_block.tf

resource "aws_s3_bucket_public_access_block" "_" {

bucket = aws_s3_bucket._.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

}

The Cloudfront Distributions

The configuration is almost the same as for the previous distribution. The only differences are:

- We use the S3 bucket (REST) endpoint instead of the S3 website endpoint, because the bucket only contains media files.

- Since the bucket is private, we attach a cloudfront origin access identity to give the distribution access to the S3 bucket.

- For private media, we add the trusted_key_groups attribute to restrict access to requests carrying a valid pre-signed URL.

resource "aws_cloudfront_distribution" "_" {

#######################

# General

#######################

enabled = true

is_ipv6_enabled = true

wait_for_deployment = true

comment = var.comment

default_root_object = var.default_root_object

price_class = var.price_class

aliases = length(var.domain_aliases) > 0 ? var.domain_aliases : [var.fqdn]

#########################

# S3 Origin configuration

#########################

origin {

# Regional endpoint avoids temporary redirects for new buckets outside us-east-1
domain_name = aws_s3_bucket._.bucket_regional_domain_name

origin_id = local.media_origin_id

s3_origin_config {

origin_access_identity = aws_cloudfront_origin_access_identity._.cloudfront_access_identity_path

}

}

#########################

# Certificate configuration

#########################

viewer_certificate {

acm_certificate_arn = var.cert_arn

ssl_support_method = "sni-only"

minimum_protocol_version = "TLSv1.2_2021"

cloudfront_default_certificate = false

}

#######################

# Logging configuration

#######################

dynamic "logging_config" {

for_each = var.s3_logging != null ? [1] : []

content {

bucket = var.s3_logging.address

prefix = "cloudfront"

include_cookies = false

}

}

#######################

# WEB Caching configuration

#######################

default_cache_behavior {

target_origin_id = local.media_origin_id

allowed_methods = [ "GET", "HEAD", "OPTIONS", ]

cached_methods = [ "GET", "HEAD", "OPTIONS", ]

compress = true

cache_policy_id = var.cache_policy_id

origin_request_policy_id = var.origin_request_policy_id

response_headers_policy_id = var.response_headers_policy_id

viewer_protocol_policy = "redirect-to-https"

trusted_key_groups = length(var.trusted_key_groups) > 0 ? var.trusted_key_groups : null

}

#######################

# Restrictions

#######################

restrictions {

geo_restriction {

restriction_type = "none"

}

}

tags = local.common_tags

}

Finally, we will wrap those resources in a Terraform module and call the module to create the public media CDN and the private media CDN:

- Private Media CDN:

# modules/webapp/components.tf

module "webapp_private_media_cdn" {

count = var.enable.private_media && length(var.media_signer_public_key) > 0 ? 1:0

source = "../../components/cdn/private"

prefix = "${local.prefix}-private-media"

common_tags = local.common_tags

# DNS

fqdn = var.private_media_fqdn

cert_arn = var.acm_cert_arn

# Cloudfront

comment = "Private Media | ${var.comment}"

cache_policy_id = module.webapp_policies.cache_policy_id

origin_request_policy_id = module.webapp_policies.origin_request_policy_id

response_headers_policy_id = module.webapp_policies.response_headers_policy_id

trusted_key_groups = [module.webapp_private_media_signer.cloudfront_key_group_id]

}

The domain alias allowed by the private cloudfront distribution is var.private_media_fqdn, which is demo-private-media.kodhive.com in our case.

The private media S3 bucket will be named demo-private-media.kodhive.com. We have specified the trusted_key_groups attribute so that the distribution only accepts URLs pre-signed with a private key whose public key belongs to those key groups.

- Public Media CDN:

# modules/webapp/components.tf

module "webapp_public_media_cdn" {

count = var.enable.public_media ? 1:0

source = "../../components/cdn/private"

prefix = "${local.prefix}-public-media"

common_tags = local.common_tags

# DNS

fqdn = var.public_media_fqdn

cert_arn = var.acm_cert_arn

# Cloudfront

comment = "Public Media | ${var.comment}"

cache_policy_id = module.webapp_policies.cache_policy_id

origin_request_policy_id = module.webapp_policies.origin_request_policy_id

response_headers_policy_id = module.webapp_policies.response_headers_policy_id

}

The domain alias allowed by the public cloudfront distribution is var.public_media_fqdn, which is demo-public-media.kodhive.com in our case.

The public media S3 bucket will be named demo-public-media.kodhive.com. We didn't pass trusted_key_groups because this is a public media CDN and everyone should have access to it without pre-signed URLs.

To create all four distributions, we call the webapp module:

# modules/route53/components.tf

module "webapp" {

source = "../../modules/webapp"

prefix = var.prefix

common_tags = local.common_tags

enable = var.enable

comment = var.comment

acm_cert_arn = module.certification.cert_arn

main_fqdn = var.main_fqdn

private_media_fqdn = var.private_media_fqdn

public_media_fqdn = var.public_media_fqdn

media_signer_public_key = var.media_signer_public_key

content_security_policy = var.content_security_policy

# Artifacts

s3_artifacts = var.s3_artifacts

# Github

github = var.github

pre_release = var.pre_release

github_repository = var.github_repository

# Build

app_base_dir = var.app_base_dir

app_build_dir = var.app_build_dir

app_node_version = var.app_node_version

app_install_cmd = var.app_install_cmd

app_build_cmd = var.app_build_cmd

# Notification

ci_notifications_slack_channels = var.ci_notifications_slack_channels

}

DNS Records

We've created the four cloudfront distributions. Instead of using the random, ugly domain names generated by CloudFront, we will expose our CDNs under good-looking domain names.

The custom domain names must match the ones provided as aliases to the cloudfront distributions, because CloudFront rejects requests for domain names that are not listed as aliases.

# modules/route53/components.tf

resource "aws_route53_record" "main_dns_record" {

count = length(module.webapp.main_cdn_dist)

zone_id = var.dns_zone_id

name = var.main_fqdn

type = "A"

alias {

name = module.webapp.main_cdn_dist[count.index]["domain_name"]

zone_id = module.webapp.main_cdn_dist[count.index]["zone_id"]

evaluate_target_health = false

}

}

resource "aws_route53_record" "preview_dns_record" {

count = length(module.webapp.preview_cdn_dist)

zone_id = var.dns_zone_id

name = local.preview_fqdn_wildcard

type = "A"

alias {

name = module.webapp.preview_cdn_dist[count.index]["domain_name"]

zone_id = module.webapp.preview_cdn_dist[count.index]["zone_id"]

evaluate_target_health = false

}

}

resource "aws_route53_record" "private_media_dns_record" {

count = length(module.webapp.private_media_cdn_dist)

zone_id = var.dns_zone_id

name = var.private_media_fqdn

type = "A"

alias {

name = module.webapp.private_media_cdn_dist[count.index]["domain_name"]

zone_id = module.webapp.private_media_cdn_dist[count.index]["zone_id"]

evaluate_target_health = false

}

}

resource "aws_route53_record" "public_media_dns_record" {

count = length(module.webapp.public_media_cdn_dist)

zone_id = var.dns_zone_id

name = var.public_media_fqdn

type = "A"

alias {

name = module.webapp.public_media_cdn_dist[count.index]["domain_name"]

zone_id = module.webapp.public_media_cdn_dist[count.index]["zone_id"]

evaluate_target_health = false

}

}
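If you ever need to create the same records outside Terraform, for example from a script calling boto3's route53 change_resource_record_sets, the alias records reduce to the change batch built below. Z2FDTNDATAQYW2 is the fixed hosted zone ID that AWS uses for all CloudFront alias targets, and Route 53 requires EvaluateTargetHealth to be false for them. The AAAA record is our own suggestion, since the distributions set is_ipv6_enabled = true. The distribution domain name in the test is a placeholder.

```python
from typing import Iterable

# Hosted zone ID that Route 53 uses for every CloudFront alias target
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def cloudfront_alias_change_batch(
    record_name: str,
    distribution_domain: str,
    record_types: Iterable[str] = ("A", "AAAA"),
) -> dict:
    """Build a ChangeBatch that points record_name at a CloudFront distribution."""
    changes = []
    for record_type in record_types:
        changes.append(
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": record_type,
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                        "DNSName": distribution_domain,
                        # Health evaluation is not supported for CloudFront targets
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        )
    return {"Changes": changes}
```

The result can be passed as the ChangeBatch argument of boto3.client("route53").change_resource_record_sets(HostedZoneId=..., ChangeBatch=...). In that call, HostedZoneId is your own zone (var.dns_zone_id in the Terraform code), not the CloudFront one.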

If you are using Cloudflare as your DNS provider, you can check/use the Cloudflare module in modules/route53/components.tf.

Generating Pre-Signed URLs

Now that we have prepared the RSA key pair, the CloudFront key group and the private media CDN, let's see how to generate pre-signed URLs using:

- The private key.

- The cloudfront public key id.

- The private media bucket name.

- The target filename to generate the pre-signed URL for it.

So, we will use the private key to generate a pre-signed URL for the working.gif media file in the demo-private-media.kodhive.com bucket, valid for 1 year (31556952 seconds).

# components/shared/signer/generate_presigned_url.py
import rsa
from datetime import datetime, timedelta, timezone
from botocore.signers import CloudFrontSigner

CLOUDFRONT_PUBLIC_KEY_ID = "Cloudfront public key ID"

PRIVATE_KEY = """
-----BEGIN RSA PRIVATE KEY-----
PRIVATE KEY: Store it in a safe place!
-----END RSA PRIVATE KEY-----
"""


def rsa_signer(message):
    """
    RSA Signer Factory
    :param message: Message to be signed using CloudFront Private Key
    :return: Signed message
    """
    # CloudFront signed URLs use RSA-SHA1 signatures
    return rsa.sign(
        message,
        rsa.PrivateKey.load_pkcs1(PRIVATE_KEY.encode("utf8")),
        "SHA-1",
    )


def generate_cloudfront_presigned_url(file_name: str, bucket_name: str, expires_in: int):
    """
    Generate a CloudFront pre-signed URL using a canned policy
    :param file_name: Full path of the file inside the bucket
    :param bucket_name: bucket name
    :param expires_in: How many seconds the pre-signed url will be valid
    :return: Cloudfront pre-signed URL
    """
    # The bucket name doubles as the CDN domain name (e.g. demo-private-media.kodhive.com)
    url = f"https://{bucket_name}/{file_name}"
    expire_date = datetime.now(timezone.utc) + timedelta(seconds=expires_in)
    cf_signer = CloudFrontSigner(CLOUDFRONT_PUBLIC_KEY_ID, rsa_signer)
    return cf_signer.generate_presigned_url(url, date_less_than=expire_date)


if __name__ == "__main__":
    print(generate_cloudfront_presigned_url(
        "working.gif",
        "demo-private-media.kodhive.com",
        31556952,
    ))

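The generated pre-signed URL uses a canned policy rather than a custom policy, which means the URL has the following shape:

https://demo-private-media.kodhive.com/working.gif?Expires=<epoch time>&Signature=<signature>&Key-Pair-Id=<public key id>

For canned policy URLs, in addition to the base URL of the file, the URL contains these query parameters:

- Expires: the expiration date, as a Unix timestamp.

- Signature: the hashed and signed version of the canned policy statement, base64-encoded with URL-safe character substitutions.

- Key-Pair-Id: the ID of the CloudFront public key that matches the private key used to generate the signature.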

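For signed URLs that use a canned policy, the policy statement is not included in the URL, unlike signed URLs that use a custom policy. Behind the scenes, cf_signer.generate_presigned_url signs a policy like the one below. In the real policy, AWS:EpochTime holds the absolute expiration time as a Unix timestamp (the signing time plus 31556952 seconds); the value shown here is only the one-year duration used in our example.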

{

"Statement": [

{

"Resource": "https://demo-private-media.kodhive.com/working.gif",

"Condition": {

"DateLessThan": {

"AWS:EpochTime": 31556952

}

}

}

]

}
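To see what actually gets signed, the sketch below rebuilds the canned policy string and the CloudFront-safe base64 encoding used for the Signature parameter. It is a standalone illustration of the format described in the CloudFront documentation, not botocore's code, and the signing time is a made-up input. Note that AWS:EpochTime is the signing time plus the 31556952-second validity, and that the policy is serialized without whitespace.

```python
import base64
import json

ONE_YEAR_SECONDS = 31556952  # 365.2425 days * 86400 seconds


def canned_policy(resource_url: str, expires_at_epoch: int) -> str:
    """Serialize a canned policy the way CloudFront expects it (no whitespace)."""
    policy = {
        "Statement": [
            {
                "Resource": resource_url,
                "Condition": {"DateLessThan": {"AWS:EpochTime": expires_at_epoch}},
            }
        ]
    }
    return json.dumps(policy, separators=(",", ":"))


def cloudfront_safe_b64(data: bytes) -> str:
    """Base64 with CloudFront's URL-safe substitutions: + -> -, = -> _, / -> ~."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.translate(str.maketrans("+=/", "-_~"))


if __name__ == "__main__":
    signed_at = 1_700_000_000  # example signing time (Unix seconds)
    print(canned_policy(
        "https://demo-private-media.kodhive.com/working.gif",
        signed_at + ONE_YEAR_SECONDS,
    ))
```

The RSA-SHA1 signature of that string (what rsa_signer returns) is passed through the safe base64 encoding to become the Signature query parameter. With a canned policy, CloudFront rebuilds the same string from the URL and Expires, which is why the policy itself never needs to travel in the URL.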

Application Continuous Integration

Source: Terraform AWS CI

Preview feature branches

Reading process.env is the standard way to retrieve environment variables. The dotenv package used by ReactJS, GatsbyJS and other generators lets us load what is in our .env file into process.env.

We want to support .env files dynamically, without passing the environment variables as Terraform variables. To do that, the build runtime must be able to generate that file from a remote location. We will create a Secrets Manager secret and give developers access to populate it with environment variables as JSON. CodeBuild can then pull the secret and populate the .env file from its JSON values. So let's create that secret:

# modules/webapp-preview/envs.tf

resource "aws_secretsmanager_secret" "_" {

name = local.prefix

recovery_window_in_days = 0

}
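The jq expression used later in the buildspec expects the secret to be a flat JSON object, and it turns each key into a KEY=value line. Here is a Python sketch of the same transformation, useful for checking what a given secret will produce. The variable names in the test are hypothetical.

```python
import json


def secret_to_dotenv(secret_string: str) -> str:
    r"""Python equivalent of: jq -r 'to_entries|map("\(.key)=\(.value|tostring)")|.[]'."""
    params = json.loads(secret_string)
    lines = []
    for key, value in params.items():
        # jq's tostring keeps strings as-is and JSON-encodes any other value
        if isinstance(value, str):
            text = value
        else:
            text = json.dumps(value, ensure_ascii=False, separators=(",", ":"))
        lines.append(f"{key}={text}\n")
    return "".join(lines)
```

Like the jq version, this writes values unquoted. A value containing spaces or newlines may therefore need quoting, depending on how your dotenv loader parses the file.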

As we agreed previously, we will leverage codebuild to build and deploy the web application static files. First, we need to create a codebuild project.

In the source stage, we connect codebuild to our github repository and provide the build specification. We don't need artifacts when the codebuild project is hooked directly to Github, and for the cache we will use an S3 remote cache.

# modules/webapp-preview/codebuild.tf

resource "aws_codebuild_project" "_" {

name = local.prefix

description = "Build and deploy feature branch of ${var.repository_name}"

build_timeout = var.build_timeout

service_role = aws_iam_role._.arn

source {

type = "GITHUB"

location = "https://github.com/${var.github.owner}/${var.repository_name}.git"

buildspec = file("${path.module}/buildspec.yml")

}

artifacts {

type = "NO_ARTIFACTS"

}

cache {

type = "S3"

location = "${var.s3_artifacts.bucket}/cache/${local.prefix}"

}

environment {

compute_type = var.compute_type

image = var.image

type = var.type

privileged_mode = var.privileged_mode

# VCS

# ---

environment_variable {

name = "VCS_OWNER"

value = var.github.owner

}

environment_variable {

name = "VCS_TOKEN"

value = var.github.token

}

environment_variable {

name = "VCS_REPO_NAME"

value = var.repository_name

}

# Install

# -------

environment_variable {

name = "APP_NODE_VERSION"

value = var.app_node_version

}

environment_variable {

name = "APP_INSTALL_CMD"

value = var.app_install_cmd

}

# Parametrize

# -------

environment_variable {

name = "APP_PARAMETERS_ID"

value = aws_secretsmanager_secret._.id

}

# Build & Deploy

# --------------

environment_variable {

name = "APP_BASE_DIR"

value = var.app_base_dir

}

environment_variable {

name = "APP_BUILD_CMD"

value = var.app_build_cmd

}

environment_variable {

name = "APP_BUILD_DIR"

value = var.app_build_dir

}

environment_variable {

name = "APP_S3_WEB_BUCKET"

value = var.s3_web.bucket

}

environment_variable {

name = "APP_DISTRIBUTION_ID"

value = var.cloudfront_distribution_id

}

environment_variable {

name = "APP_PREVIEW_BASE_FQDN"

value = var.app_preview_base_fqdn

}

}

tags = local.common_tags

# To prevent missing permissions during first build

depends_on = [time_sleep.wait_30_seconds]

}

In order for our codebuild project to pick up changes to feature branch pull requests, we will create a codebuild webhook on the target repository. The webhook will trigger a codebuild build for these two events:

- PULL_REQUEST_CREATED: when a pull request is opened for a feature branch.

- PULL_REQUEST_UPDATED: when a contributor pushes commits to the feature branch while the pull request is still open.

# modules/webapp-preview/webhook.tf

resource "aws_codebuild_webhook" "_" {

project_name = aws_codebuild_project._.name

filter_group {

filter {

type = "EVENT"

pattern = "PULL_REQUEST_CREATED, PULL_REQUEST_UPDATED"

}

}

}
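An EVENT filter pattern is a comma-separated list of event types, and a build starts when the incoming event is one of them. The small Python model below is ours, not CodeBuild's implementation. It makes one consequence visible: PULL_REQUEST_REOPENED is not in the list, so reopening a closed pull request will not rebuild its preview unless you add that event to the pattern.

```python
from typing import Set


def event_types(pattern: str) -> Set[str]:
    """Split an EVENT filter pattern such as 'PUSH, PULL_REQUEST_CREATED'."""
    return {part.strip() for part in pattern.split(",") if part.strip()}


def triggers_build(event: str, pattern: str) -> bool:
    """True when the webhook event type is listed in the filter pattern."""
    return event in event_types(pattern)


if __name__ == "__main__":
    pattern = "PULL_REQUEST_CREATED, PULL_REQUEST_UPDATED"
    for event in ("PULL_REQUEST_CREATED", "PULL_REQUEST_UPDATED", "PULL_REQUEST_REOPENED", "PUSH"):
        print(event, triggers_build(event, pattern))
```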

On every webhook event, codebuild will run these steps:

- Change the shell to bash.

- Install the Node.js version specified by the APP_NODE_VERSION environment variable.

- Change to the base directory (APP_BASE_DIR), in case the repository is a monorepo.

- Extract the node_modules cache, if any.

- Parse the pull request number from the webhook event.

- Install the web application dependencies using the APP_INSTALL_CMD environment variable. With a restored node_modules cache and an unchanged package.json, this step should have little left to do.

- Pull the environment variables from Secrets Manager and populate the .env file.

- Build the application static files using the APP_BUILD_CMD environment variable.

- Upload the static files to the public S3 bucket under the VCS_PR_NUMBER S3 prefix, so the CloudFront URL rewrite function can route requests with a preview sub-domain and a PR number to the target pull request application.

- Invalidate the whole CloudFront edge cache, so viewers see the new application with the new changes.

- When the pull request is first created, notify Github users of the preview build completion and the preview URL by commenting on the pull request that triggered the build.

- Zip the node_modules folder and cache it on S3.

# modules/webapp-preview/buildspec.yml
version: 0.2

env:
  shell: bash

phases:
  install:
    runtime-versions:
      nodejs: ${APP_NODE_VERSION}
    commands:
      # Change to base dir
      - cd ${APP_BASE_DIR}
      # Extract downloaded node_modules cache if any
      - |
        if [ -f "node_modules.tar.gz" ]; then
          echo Unzipping node_modules cache
          tar -zxf node_modules.tar.gz && echo "extract cached deps succeeded" || echo "extract cached deps failed"
        fi
      # VCS Ops
      - VCS_PR_NUMBER=$(echo $CODEBUILD_WEBHOOK_TRIGGER | sed 's/pr\///g')
      - echo "${CODEBUILD_WEBHOOK_EVENT} triggered for pull request ${VCS_PR_NUMBER}"
      # Install dependencies
      - echo Installing app dependencies ...
      - ${APP_INSTALL_CMD}
  pre_build:
    commands:
      # Pull parameters and save to .env
      - |
        echo Pulling parameters and exporting to .env file...
        aws secretsmanager get-secret-value --secret-id ${APP_PARAMETERS_ID} --query SecretString --output text \
          | jq -r 'to_entries|map("\(.key)=\(.value|tostring)")|.[]' > .env
  build:
    commands:
      # Build, deploy and invalidate web cache
      - echo Building static site...
      - ${APP_BUILD_CMD}
      - aws s3 sync ${APP_BUILD_DIR}/. s3://${APP_S3_WEB_BUCKET}/${VCS_PR_NUMBER} --delete --acl public-read --only-show-errors
      - aws cloudfront create-invalidation --distribution-id ${APP_DISTRIBUTION_ID} --paths '/*'
      # Notify deploy preview ready
      - |
        if [[ $CODEBUILD_WEBHOOK_EVENT == "PULL_REQUEST_CREATED" ]]; then
          APP_PREVIEW_URL="https://${VCS_PR_NUMBER}-${APP_PREVIEW_BASE_FQDN}"
          APP_PREVIEW_COMMENT="✔️ Deploy Preview is ready! <br /><br />😎 Browse the preview: ${APP_PREVIEW_URL}"
          VCS_PR_URL="https://api.github.com/repos/${VCS_OWNER}/${VCS_REPO_NAME}/issues/${VCS_PR_NUMBER}/comments"
          curl -s -S -f -o /dev/null ${VCS_PR_URL} -H "Authorization: token ${VCS_TOKEN}" -d '{"body": "'"$APP_PREVIEW_COMMENT"'"}'
        fi
  post_build:
    commands:
      # Save node_modules cache
      - tar -zcf node_modules.tar.gz node_modules && echo "compress deps succeeded" || echo "compress deps failed"

cache:
  paths:
    - 'node_modules.tar.gz'
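The VCS Ops and notification steps in this buildspec are mostly string manipulation. This Python sketch reproduces them so the expected values are easy to check. CodeBuild sets CODEBUILD_WEBHOOK_TRIGGER to pr/<number> for pull request events, and the preview URL follows the https://<number>-<preview base FQDN> convention. Building the comment body with json.dumps is our own variation: unlike the shell quoting above, it stays valid JSON even if the comment contains double quotes.

```python
import json


def pr_number_from_trigger(trigger: str) -> str:
    """Extract '42' from a CODEBUILD_WEBHOOK_TRIGGER value such as 'pr/42'."""
    prefix = "pr/"
    if not trigger.startswith(prefix):
        raise ValueError(f"not a pull request trigger: {trigger!r}")
    return trigger[len(prefix):]


def preview_url(pr_number: str, preview_base_fqdn: str) -> str:
    """Preview address served by the wildcard preview distribution."""
    return f"https://{pr_number}-{preview_base_fqdn}"


def comment_payload(url: str) -> str:
    """JSON body for POST /repos/{owner}/{repo}/issues/{number}/comments."""
    body = f"✔️ Deploy Preview is ready! <br /><br />😎 Browse the preview: {url}"
    return json.dumps({"body": body})


if __name__ == "__main__":
    number = pr_number_from_trigger("pr/42")
    print(comment_payload(preview_url(number, "preview.demo.kodhive.com")))
```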

Hooyah! It's working 🎉

Release the application

To release the application, we place our codebuild project in a pipeline, for two reasons:

- To guarantee that only one build runs at a time, so two builds cannot override each other's content during S3 uploads. Unlike the preview bucket, the main S3 bucket holds a single application at the bucket root.

- To benefit from CodePipeline Slack notifications.

For the release codebuild project, we changed the source from Github to Codepipeline, because the application source code is cloned by the pipeline and not by Codebuild. We also changed the artifacts to CODEPIPELINE, because that's how codepipeline passes the application source code down to codebuild.

# modules/webapp-release/build/codebuild.tf

resource "aws_codebuild_project" "_" {

name = local.prefix

description = "Build ${var.github_repository.branch} of ${var.github_repository.name} and deploy"

build_timeout = var.build_timeout

service_role = aws_iam_role._.arn

source {

type = "CODEPIPELINE"

buildspec = file("${path.module}/buildspec.yml")

}

artifacts {

type = "CODEPIPELINE"

}

.

.

.

}

The release build specification is simpler because it does not deal with preview branches; all other steps remain the same.

version: 0.2

phases:
  install:
    runtime-versions:
      nodejs: ${APP_NODE_VERSION}
    commands:
      # Change to base dir
      - cd ${APP_BASE_DIR}
      # Extract downloaded node_modules cache if any
      - |
        if [ -f "node_modules.tar.gz" ]; then
          echo Unzipping node_modules cache
          tar -zxf node_modules.tar.gz && echo "extract cached deps succeeded" || echo "extract cached deps failed"
        fi
      # Install dependencies
      - echo Installing app dependencies ...
      - ${APP_INSTALL_CMD}
  pre_build:
    commands:
      # Pull parameters and save to .env
      - echo Pulling parameters and exporting to .env file...
      - PARAMS=$(aws secretsmanager get-secret-value --secret-id ${APP_PARAMETERS_ID} --query SecretString --output text)
      # Quote $PARAMS so the JSON reaches jq unchanged (no word splitting or globbing)
      - echo "$PARAMS" | jq -r 'to_entries|map("\(.key)=\(.value|tostring)")|.[]' > .env
  build:
    commands:
      # Build, deploy and invalidate web cache
      - echo Building static site...
      - ${APP_BUILD_CMD}
      - aws s3 sync ${APP_BUILD_DIR}/. s3://${APP_S3_WEB_BUCKET} --delete --acl public-read --only-show-errors
      - aws cloudfront create-invalidation --distribution-id ${APP_DISTRIBUTION_ID} --paths '/*'
  post_build:
    commands:
      # Save node_modules cache
      - tar -zcf node_modules.tar.gz node_modules && echo "compress deps succeeded" || echo "compress deps failed"

cache:
  paths:
    - 'node_modules.tar.gz'

After creating the codebuild project, we hook it into the codepipeline project and connect the pipeline to the target github repository.

# modules/webapp-release/pipeline/codepipeline.tf

resource "aws_codepipeline" "_" {

name = local.prefix

role_arn = aws_iam_role._.arn

##########################

# Artifact Store S3 Bucket

##########################

artifact_store {

location = var.s3_artifacts.bucket

type = "S3"

}

#########################

# Pull source from Github

#########################

stage {

name = "Source"

action {

name = "Source"

category = "Source"

owner = "AWS"

provider = "CodeStarSourceConnection"

version = "1"

output_artifacts = ["code"]

configuration = {

FullRepositoryId = "${var.github.owner}/${var.github_repository.name}"

BranchName = var.github_repository.branch

DetectChanges = var.pre_release

ConnectionArn = var.github.connection_arn

OutputArtifactFormat = "CODEBUILD_CLONE_REF"

}

}

}

#########################

# Build & Deploy to S3

#########################

stage {

name = "Build"

action {

name = "Build"

category = "Build"

owner = "AWS"

provider = "CodeBuild"

input_artifacts = ["code"]

output_artifacts = ["package"]

version = "1"

configuration = {

ProjectName = var.codebuild_project_name

}

}

}

}

To support QA and Production releases, we introduce a pre_release boolean Terraform variable:

- If it is set to true, codepipeline detects changes in the target branch, and the pipeline is triggered whenever a commit is merged into that branch (e.g. master).

- If it is set to false, codepipeline does not detect changes in the target branch. Instead, we create a github webhook that triggers the pipeline whenever a Github release is published.

# modules/webapp-release/pipeline/webhook.tf

# Webhooks (Only for github releases)

resource "aws_codepipeline_webhook" "_" {

count = var.pre_release ? 0:1

name = local.prefix

authentication = "GITHUB_HMAC"

target_action = "Source"

target_pipeline = aws_codepipeline._.name

authentication_configuration {

secret_token = var.github.webhook_secret

}

filter {

json_path = "$.action"

match_equals = "published"

}

}

resource "github_repository_webhook" "_" {

count = var.pre_release ? 0:1

repository = var.github_repository.name

configuration {

url = aws_codepipeline_webhook._.0.url

secret = var.github.webhook_secret

content_type = "json"

# Keep TLS verification on for the HTTPS CodePipeline webhook endpoint
insecure_ssl = false

}

events = [ "release" ]

}
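The CodePipeline webhook is configured with two checks. First, the request must carry a valid HMAC signature made with the shared webhook secret (GITHUB_HMAC). Second, the payload's $.action must equal published. The Python sketch below models both checks for illustration only. It is not CodePipeline's implementation, and it uses GitHub's X-Hub-Signature-256 format (sha256=<hex digest>) as the example signature header. The secret and payloads in the test are made up.

```python
import hashlib
import hmac
import json


def github_signature_256(secret: str, body: bytes) -> str:
    """Value GitHub sends in the X-Hub-Signature-256 header for this body."""
    digest = hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    return "sha256=" + digest


def should_start_pipeline(secret: str, body: bytes, signature: str) -> bool:
    # 1. Authenticate: constant-time comparison of expected and received signatures
    if not hmac.compare_digest(github_signature_256(secret, body), signature):
        return False
    # 2. Filter: only deliveries whose action is 'published' pass
    return json.loads(body).get("action") == "published"
```

GitHub also delivers release events with other actions, such as created or released, and the action filter discards those.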

Finally, we create the Slack notification rules:

# modules/webapp-release/pipeline/notification.tf

resource "aws_codestarnotifications_notification_rule" "notify_info" {

name = "${local.prefix}-info"

resource = aws_codepipeline._.arn

detail_type = "FULL"

status = "ENABLED"

event_type_ids = [

"codepipeline-pipeline-pipeline-execution-started",

"codepipeline-pipeline-pipeline-execution-succeeded"

]

target {

type = "AWSChatbotSlack"

address = "${local.chatbot}/${var.ci_notifications_slack_channels.info}"

}

}

resource "aws_codestarnotifications_notification_rule" "notify_alert" {

name = "${local.prefix}-alert"

resource = aws_codepipeline._.arn

detail_type = "FULL"

status = "ENABLED"

event_type_ids = [

"codepipeline-pipeline-pipeline-execution-failed",

]

target {

type = "AWSChatbotSlack"

address = "${local.chatbot}/${var.ci_notifications_slack_channels.alert}"

}

}

What's Next?

Throughout this series of articles, we've discussed:

- Serverless Architecture and Components Integration Patterns

- Terraform and AWS Lambda External CI

- Deploy HTTP API to AWS Lambda and Expose it via API Gateway

- Realtime Interactive and Secure Applications with AWS Websocket API Gateway

- Preview and Ship Static Web Applications and Private Media with S3 and Cloudfront

In the next article, we will start using the modules we've prepared to build a real-world application. I'm thinking of an application that takes advantage of all the modules, something like a Serverless Realtime Chat Application. I'm not sure yet what to build, but I'm sure it will be something awesome.