This is the first article of a series of articles about monitoring.

-> Second article: APM using Datadog

-> Third article: Monitoring CodePipeline deployments

-> Fourth article: Monitoring external services status using RSS and Slack

Alarm and monitoring systems are a key part of mature products and applications. We are going to learn about some key metrics we must monitor on practically every application. This article will be focused on AWS CloudWatch metrics. We will alert to Slack based on these metrics values and we will use Terraform to manage them as IaC (infrastructure as code) and make them reusable.

AWS CloudWatch key metrics

Amazon CloudWatch is a monitoring and observability service provided by AWS. It's one of the easiest and better ways to collect data on AWS. In this article, we are going to focus on the following CloudWatch metrics.

- Application Load Balancer (ALB): 5XX errors, latency, rejected connections and unhealthy hosts.

- Relational Database Service (RDS): free storage space.

There are some metrics for other services you should also monitor if you are using them.

- Simple Queue Service (SQS): age of the oldest message in the jobs queue and length of the dead-letter queue (DLQ).

- Elastic Block Storage (EBS): volume status, volume queue length and volume idle time.

- Elastic Compute Cloud (EC2): status checks.

- Elasticache: cache hits and misses and evictions.

- Lambda: duration, errors, throttles.

Alerting to Slack

** Update (20/07/2020): ** We are now using AWS Chatbot for sending notifications to Slack, check out the article we wrote about this setup and skip to the CloudWatch Alarms section.

We recommend you follow along with the source code for this article.

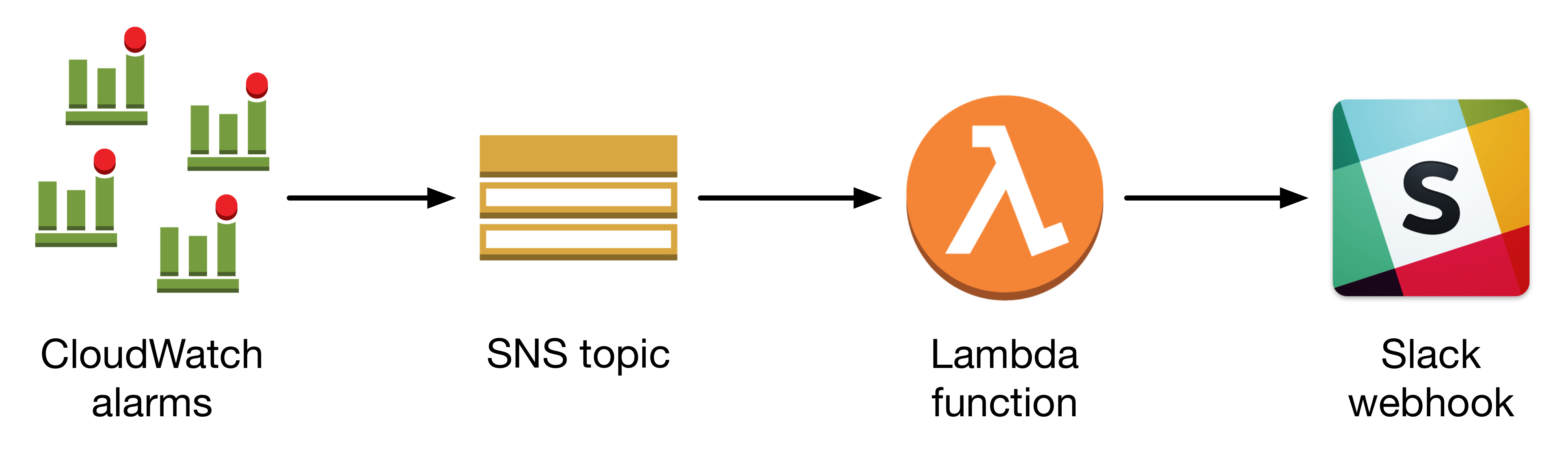

We are going to leverage CloudWatch Alarms to alert to Slack when a metric surpass a determined threshold. Besides this, we will need a Lambda function and an SNS topic to send messages to Slack.

SNS topic

First of all, we need to create an SNS topic. We will configure our CloudWatch Alarms to notify this topic when an alarm is raised. Next, we have to create a subscription for this topic. This subscription will execute the Lambda function that parses the message data and post a message to Slack.

Lambda function

We need a Lambda function to send messages to Slack. Our Lambda function parses CloudWatch Alarms event messages and extract fields to show a message like the one shown below. It can also be used to send other messages to Slack than CloudWatch Alarms.

You'll need to create a Slack webhook and set it as an ENV variable for the Lambda function.

Cloudwatch Alarms

Now we have an SNS topic and a Lambda function to post messages to Slack, we will create alerts for the above metrics.

* Metric namespace: AWS/ApplicationELB

* Metric name: HTTPCode_ELB_5XX_Count

* Metric dimension: LoadBalancer

* Metric period: 1 minute

* Number of periods: 5

* Statistic: Sum

* Alarm condition: > 1

* Metric namespace: AWS/ApplicationELB

* Metric name: HTTPCode_Target_5XX_Count

* Metric dimension: LoadBalancer

* Metric period: 1 minute

* Number of periods: 5

* Statistic: Sum

* Alarm condition: > 1

* Metric namespace: AWS/ApplicationELB

* Metric name: TargetResponseTime

* Metric dimension: LoadBalancer

* Metric period: 1 minute

* Number of periods: 5

* Statistic: Average

* Alarm condition: > 0.2 seconds

* Metric namespace: AWS/ApplicationELB

* Metric name: RejectedConnectionCount

* Metric dimension: LoadBalancer

* Metric period: 1 minute

* Number of periods: 5

* Statistic: Sum

* Alarm condition: > 1

* Metric namespace: AWS/ApplicationELB

* Metric name: UnHealthyHostCount

* Metric dimension: LoadBalancer

* Metric period: 1 minute

* Number of periods: 2

* Statistic: Average

* Alarm condition: > 0

* Metric namespace: AWS/RDS

* Metric name: FreeStorageSpace

* Metric dimension: DBInstanceIdentifier

* Metric period: 1 minute

* Number of periods: 5 or 1 out of 5

* Statistic: Minimum

* Alarm condition: < 1000000000 Bytes

With these alarms, you'll have visibility if something goes wrong in your application.

Thanks for reading!

This was everything for the first article of a series of articles about monitoring. In the next ones, we will learn how to monitor CodePipeline deployments, APM and external services status. Stay tuned!