What is DevOps?

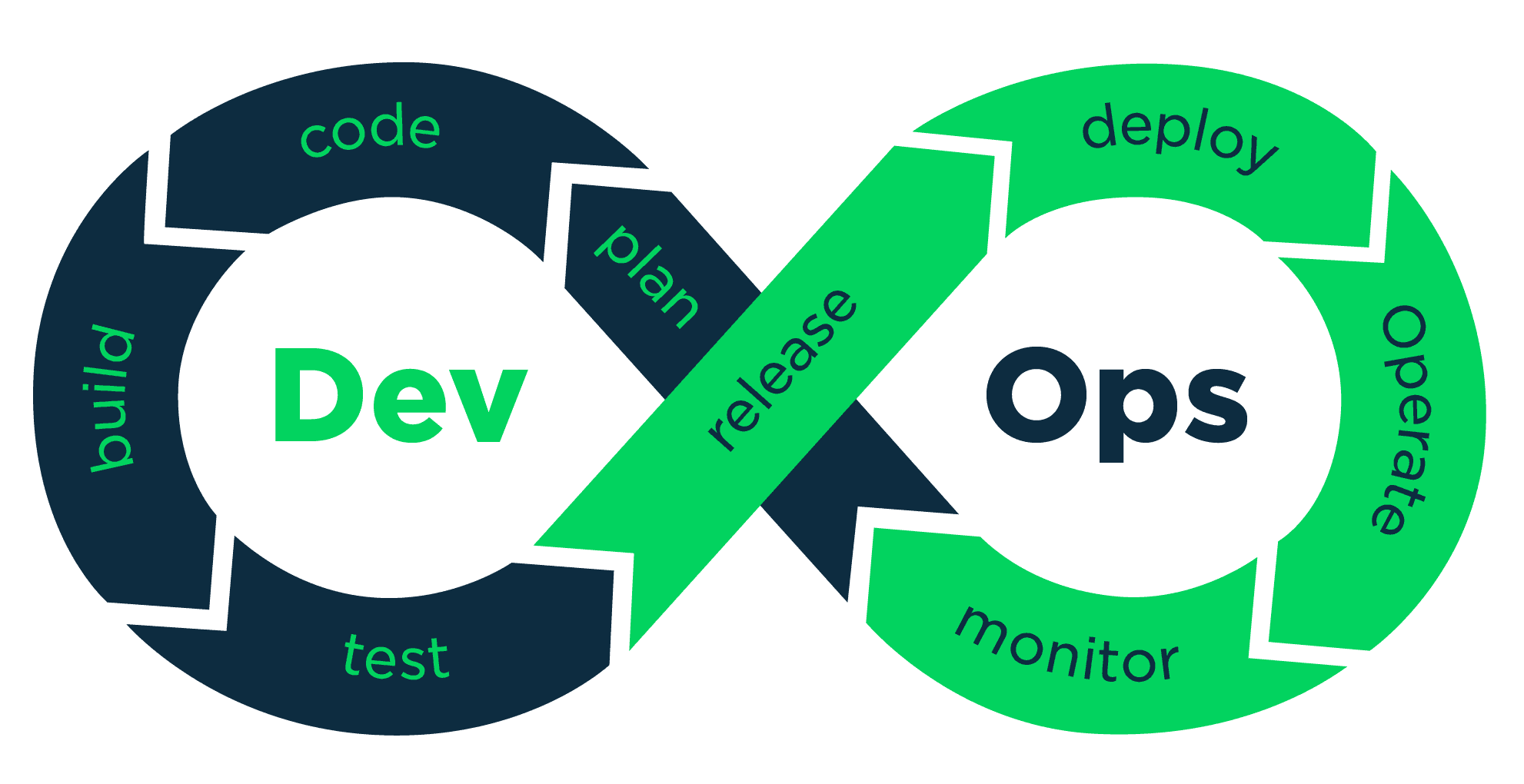

DevOps is the combination of cultural philosophies, practices, and tools that increase an organization’s ability to deliver applications and services at high velocity: evolving and improving products at a faster pace than organizations using traditional software development and infrastructure management processes.

Benefits of DevOps

Speed

Move at a high velocity so you can innovate for customers faster, adapt to changing markets better, and grow more efficient at driving business results. The DevOps model enables your developers and operations teams to achieve these results. For example, microservices and continuous delivery let teams take ownership of services and then release updates to them quicker.

Rapid Delivery

Increase the frequency and pace of releases so you can innovate and improve your product faster. The quicker you can release new features and fix bugs, the faster you can respond to your customers’ needs and build a competitive advantage. Continuous integration and continuous delivery are practices that automate the software release process, from build to deploy.

Reliability

Ensure the quality of application updates and infrastructure changes so you can reliably deliver at a more rapid pace while maintaining a positive experience for end users. Use practices like continuous integration and continuous delivery to test that each change is functional and safe. Monitoring and logging practices help you stay informed of performance in real-time.

Scale

Operate and manage your infrastructure and development processes at scale. Automation and consistency help you manage complex or changing systems efficiently and with reduced risk. For example, infrastructure as code helps you manage your development, testing, and production environments in a repeatable and more efficient manner.

Improved Collaboration

Build more effective teams under a DevOps cultural model, which emphasizes values such as ownership and accountability. Developers and operations teams collaborate closely, share many responsibilities, and combine their workflows. This reduces inefficiencies and saves time (e.g. reduced handover periods between developers and operations, writing code that takes into account the environment in which it is run).

Security

Move quickly while retaining control and preserving compliance. You can adopt a DevOps model without sacrificing security by using automated compliance policies, fine-grained controls, and configuration management techniques. For example, using infrastructure as code and policy as code, you can define and then track compliance at scale.

Together, these benefits enable organizations to better serve their customers and compete more effectively in the market.

DevOps Practices

The following are DevOps best practices:

- Continuous Integration

- Continuous Delivery / Continuous Deployment

- Infrastructure as Code

- Microservices

- Monitoring and Logging

- Communication and Collaboration

Continuous Integration

Developers practicing continuous integration merge their changes back to the main branch as often as possible. The developer's changes are validated by creating a build and running automated tests against the build. By doing so, you avoid the integration hell that usually happens when people wait for release day to merge their changes into the release branch. Continuous integration puts a great emphasis on testing automation to check that the application is not broken whenever new commits are integrated into the main branch.

Continuous Delivery

Continuous delivery is an extension of continuous integration to make sure that you can release new changes to your customers quickly in a sustainable way. This means that on top of having automated your testing, you have also automated your release process, and you can deploy your application at any time with the click of a button. In theory, with continuous delivery, you can decide to release daily, weekly, fortnightly, or whatever suits your business requirements. However, if you truly want to get the benefits of continuous delivery, you should deploy to production as early as possible to make sure that you release small batches that are easy to troubleshoot in case of a problem.

Continuous Deployment

Continuous deployment goes one step further than continuous delivery. With this practice, every change that passes all stages of your production pipeline is released to your customers. There's no human intervention, and only a failed test will prevent a new change from being deployed to production. Continuous deployment is an excellent way to accelerate the feedback loop with your customers and take the pressure off the team, as there isn't a Release Day anymore. Developers can focus on building software, and they see their work go live minutes after they've finished working on it.

Infrastructure as Code

Infrastructure as code is a practice in which infrastructure is provisioned and managed using code and software development techniques, such as version control and continuous integration. The cloud’s API-driven model enables developers and system administrators to interact with infrastructure programmatically, and at scale, instead of needing to manually set up and configure resources. Thus, engineers can interface with infrastructure using code-based tools and treat infrastructure in a manner similar to how they treat application code. Because they are defined by code, infrastructure and servers can quickly be deployed using standardized patterns, updated with the latest patches and versions, or duplicated in repeatable ways.
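As a small illustration of the idea, here is a minimal Terraform sketch that describes a single S3 bucket as code. The region, bucket name, and tags are hypothetical and are not taken from the example repository discussed later.

```hcl
# Hypothetical example: one S3 bucket described as code.
provider "aws" {
  region = "us-east-1" # assumed region
}

resource "aws_s3_bucket" "logs" {
  # Hypothetical name; S3 bucket names must be globally unique.
  bucket = "example-company-logs"

  tags = {
    env = "qa"
  }
}
```

Because the bucket lives in a file, it can be reviewed in a pull request, versioned, and recreated in another environment by changing only the name and tags.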

For more information, see this blog article: https://www.obytes.com/blog/2018/introduction-to-IAS/

Microservices

Microservices architecture is a design approach to build a single application as a set of small services. Each service runs in its own process and communicates with other services through a well-defined interface using a lightweight mechanism, typically an HTTP-based application programming interface (API). Microservices are built around business capabilities; each service is scoped to a single purpose. You can use different frameworks or programming languages to write microservices and deploy them independently, as a single service, or as a group of services.

Let's apply some DevOps practices on AWS

In this post we will cover microservices, CI/CD, and infrastructure as code (IaC), using the following tools and services:

- Terraform: the tool used for infrastructure as code, to provision resources on AWS and GitHub. For more information, see https://www.obytes.com/blog/2018/introduction-to-IAS/

- GitHub: the platform used to host the code.

- AWS CodeBuild: a fully managed continuous integration service that compiles your source code, runs tests, and produces software packages ready for deployment. For more information, see https://aws.amazon.com/codebuild/

- AWS CodePipeline: a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates. For more information, see https://aws.amazon.com/codepipeline/

- Amazon ECS: Amazon Elastic Container Service (Amazon ECS) is a highly scalable, high-performance container orchestration service that supports Docker containers and allows you to easily run and scale containerized applications on AWS. For more information, see https://aws.amazon.com/ecs/

This tutorial assumes you already have some knowledge of Docker, Terraform, ECS, and AWS. The source code is hosted in a public repository in the Obytes organization: https://github.com/obytes/terraform-example-hello-world

The source code contains:

- a simple application (built with Django) that returns a "hello world" message

- a terraform folder containing the Terraform code that prepares and deploys the service to a VPC using AWS CodeBuild

In the terraform folder, the project is organized into modules, stacks, and providers, as recommended by Terraform:

- each folder under the aws module contains an AWS service with its dependencies

- each stack under stacks contains a set of modules

- each provider under providers may or may not use stacks to provision resources for that provider

GitHub provider

Let's start with the GitHub provider to provision a GitHub repository.

Under the path Terraform/providers/github, reconfigure the Terraform backend that stores the state.
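For readers who have not configured a backend before, an S3 backend block might look roughly like the sketch below. The bucket name, key, and region are placeholders invented for illustration; replace them with a bucket you own.

```hcl
terraform {
  backend "s3" {
    bucket  = "example-terraform-state"            # hypothetical bucket name
    key     = "providers/github/terraform.tfstate" # hypothetical state key
    region  = "us-east-1"                          # assumed region
    encrypt = true                                 # encrypt the state object at rest
  }
}
```

Backend blocks cannot use variables or interpolation, so these values have to be written literally or passed with -backend-config when running terraform init.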

Create a terraform.tfvars file and add your personal GitHub token or a machine-user token:

$ cat terraform.tfvars

github_token="0xbe0n7vl1q8pleccgbvycg97qu7wnsmq96x24idbeb6qw882q"
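The token is then typically consumed through a variable and handed to the GitHub provider. The sketch below assumes the older provider schema that matches the Terraform 0.11 syntax used in this post, where the argument is called organization; the actual provider block in the repository may differ.

```hcl
# Declared so that the value in terraform.tfvars has somewhere to land.
variable "github_token" {
  description = "Personal or machine-user GitHub token, set in terraform.tfvars"
}

provider "github" {
  token        = "${var.github_token}"
  organization = "obytes" # the organization shown in the outputs of this post
}
```

Since terraform.tfvars now holds a credential, it is worth keeping that file out of version control.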

then:

    $ make plan

    Terraform will perform the following actions:

      + github_branch_protection.hello
          id:                 <computed>
          branch:             "master"
          enforce_admins:     "false"
          etag:               <computed>
          repository:         "hello-world"

      + github_repository.hello
          id:                 <computed>
          allow_merge_commit: "true"
          allow_rebase_merge: "true"
          allow_squash_merge: "true"
          archived:           "false"
          default_branch:     <computed>
          description:        "hello world service"
          etag:               <computed>
          full_name:          <computed>
          git_clone_url:      <computed>
          has_downloads:      "false"
          has_issues:         "true"
          has_wiki:           "true"
          html_url:           <computed>
          http_clone_url:     <computed>
          name:               "hello-world"
          private:            "false"
          ssh_clone_url:      <computed>
          svn_url:            <computed>
          topics.#:           "3"
          topics.2517090172:  "tuto"
          topics.2786585007:  "python"
          topics.715589264:   "django"

    Plan: 2 to add, 0 to change, 0 to destroy.
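Working backwards from the plan, the two resources could be declared roughly as follows. This is a reconstruction from the plan output only, using the older GitHub provider schema; the definitions in the repository may be organized differently.

```hcl
# Reconstructed from the plan output above; not copied from the repository.
resource "github_repository" "hello" {
  name        = "hello-world"
  description = "hello world service"
  private     = false
  has_issues  = true
  has_wiki    = true
  topics      = ["tuto", "python", "django"]
}

resource "github_branch_protection" "hello" {
  repository     = "${github_repository.hello.name}"
  branch         = "master"
  enforce_admins = false
}
```

Referencing github_repository.hello.name in the branch protection makes Terraform create the repository first.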

Apply the plan to create the repository on GitHub:

    $ make apply

These outputs will be used by the AWS provider to configure CI/CD:

    $ make output
    org = obytes
    github_token = 0xbe0n7vl1q8pleccgbvycg97qu7wnsmq96x24idbeb6qw882q
    repo = hello-world

AWS Provider

Now that we are done with the GitHub provider, let's move on to the AWS provider.

The stacks are declared in this file: Terraform/providers/aws/us-east/qa/stacks.tf

Reconfigure the Terraform S3 backend to store the state, as we did for the GitHub provider.

AWS Stacks

Common Module:

    module "common" {
      source      = "../../../../modules/stacks/common-env"
      prefix      = "${local.prefix}"
      common_tags = "${local.common_tags}"
      s3_logging  = "${module.common.s3_logging}"
      vpc_id      = "${module.common.vpc["vpc_id"]}"
      cidr_block  = "${module.common.vpc["cidr_block"]}"

      route_table_ids = [
        "${module.common.private_route_table_ids}",
        "${module.common.public_route_table_ids}",
      ]

      security_group_ids = {
        default_vpc = "${module.common.default_sg_id}"
      }

      kms_arn    = "${module.common.kms_arn}"
      net_cidr   = "${var.net_cidr}"
      net_ranges = "${var.net_ranges}"
    }

This module provisions the common resources. It creates:

- network components:

  - AWS VPC

  - AWS subnets

  - routes ...

- an ECS cluster for Fargate tasks

- common S3 buckets that will be used for logging, caching, and artifacts ...
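The module blocks in this post read local.prefix and local.common_tags. Their definitions are not shown here, so the values below are only a guess at their shape; the env key is included because the pipeline module later looks up common_tags["env"].

```hcl
# Hypothetical values; the real locals are defined in the repository.
locals {
  prefix = "hello-qa"

  common_tags = {
    env     = "qa"
    project = "hello-world"
  }
}
```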

hello-service Module

This module creates:

- an ECS task definition that manages the application container

- an ECS service that runs and manages the requested number of tasks, as well as the associated load balancers

- an ECR repository, a registry for the Docker image of the app

- an ALB that routes traffic to the application

- an AWS CodeBuild project

It also applies the CI/CD module Terraform/modules/aws/ecs-svc-pipeline to create the deployment pipeline for the application.

This module uses Terraform remote state to read the outputs of the GitHub provider and configure CI/CD.

    module "hello-service" {
      source             = "../../../../modules/stacks/hello-service"
      prefix             = "${local.prefix}"
      common_tags        = "${local.common_tags}"
      fargate_cpu        = "1024"
      fargate_memory     = "2048"
      desired_count      = "3"
      vpc_id             = "${module.common.vpc["vpc_id"]}"
      public_subnet_ids  = "${module.common.public_subnet_ids}"
      private_subnet_ids = "${module.common.private_subnet_ids}"

      # cluster
      ecs = "${module.common.ecs}"

      # security & audit
      kms_arn = "${module.common.kms_arn}"

      # gh
      gh_token = "${data.terraform_remote_state.github.token}"
      gh_org   = "${data.terraform_remote_state.github.organization}"
      gh_repo  = "${data.terraform_remote_state.github.repo["hello_world"]}"

      # s3
      s3_artifacts = "${module.common.s3_artifacts}"
      s3_logging   = "${module.common.s3_logging}"
      s3_cache     = "${module.common.s3_codebuild_cache}"

      pipe_webhook = "${merge(
        map("match_equals", "refs/heads/master"),
        map("json_path", "$.ref"),
        map("secret", "${var.webhook_secret}")
      )}"
    }
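The data.terraform_remote_state.github references in the module above need a matching data source that points at the state written by the GitHub provider. A minimal sketch in the same Terraform 0.11 syntax, with a hypothetical bucket, key, and region:

```hcl
data "terraform_remote_state" "github" {
  backend = "s3"

  # Must point at the same bucket and key as the GitHub provider's backend.
  config {
    bucket = "example-terraform-state"            # hypothetical
    key    = "providers/github/terraform.tfstate" # hypothetical
    region = "us-east-1"                          # assumed region
  }
}
```

The attribute names read from this data source have to match the output names declared on the GitHub side. On Terraform 0.12 and later, config becomes an argument (config = { ... }) and values are read through the outputs attribute, for example data.terraform_remote_state.github.outputs.repo.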

Deployment Strategies

Deployment strategies differ from one company to another, depending on their teams, agreements, and philosophy.

Here at Obytes we mostly provision three environments for our clients:

- Test: This environment is used to test features that we think could break Pre. It is mostly used by the infrastructure and DevOps teams to test upgrades, maintenance, and infrastructure optimizations.

  - This environment is linked to a test branch. Whenever there is a merge or a push to this branch, it triggers an AWS pipeline that pulls, builds, tests, and deploys to the ECS cluster.

- Pre: The Pre environment is a copy of the production environment, with resource types optimized to reduce costs.

  - This environment is linked to the master branch: whenever there is a merge to master, the changes are deployed to Pre. It does not accept merges from the test branch, which means you need to open a PR from your own branch against master. This helps us avoid commit conflicts and reduces engineers' development time.

- Pro: Pro is the production environment, which serves the platforms to our real clients.

  - This environment is linked to GitHub tags of the form x.x.x. The developer needs to create a release from the master branch in the GitHub UI, which ends up triggering an AWS pipeline.

We also configure an AWS CodeBuild project for each GitHub repository. It is triggered on branches other than master, to build and test changes.

CodeBuild is triggered using a GitHub webhook together with a CodeBuild webhook:

    resource "aws_codebuild_webhook" "this" {
      project_name  = "${aws_codebuild_project.build.name}"
      branch_filter = "^(?!master).*$"
    }

    resource "github_repository_webhook" "this" {
      active     = true
      events     = ["push"]
      name       = "web"
      repository = "${var.gh_repo}"

      configuration {
        url          = "${aws_codebuild_webhook.this.payload_url}"
        secret       = "${aws_codebuild_webhook.this.secret}"
        content_type = "json"
        insecure_ssl = false
      }
    }

    output "codebuild_secret" {
      value = "${aws_codebuild_webhook.this.secret}"
    }

    output "codebuild_url" {
      value = "${aws_codebuild_webhook.this.payload_url}"
    }

How does that work?

The folder Terraform/modules/aws/ecs-svc-pipeline contains:

- the CodePipeline configuration, with stages and permissions ...

- a CodePipeline webhook that triggers the pipeline

- a GitHub webhook that invokes the CodePipeline webhook after a merge or release event on GitHub

Webhook resources:

    resource "aws_codepipeline_webhook" "gh_webhook" {
      name            = "${local.prefix}"
      authentication  = "GITHUB_HMAC"
      target_action   = "Source"
      target_pipeline = "${aws_codepipeline.cd.name}"

      authentication_configuration {
        secret_token = "${var.pipe_webhook["secret"]}"
      }

      filter {
        json_path    = "${var.pipe_webhook["json_path"]}"
        match_equals = "${var.pipe_webhook["match_equals"]}"
      }
    }

    # Wire the CodePipeline webhook into a GitHub repository.
    resource "github_repository_webhook" "gh_webhook" {
      repository = "${var.gh_repo}"
      name       = "web"

      configuration {
        url          = "${aws_codepipeline_webhook.gh_webhook.url}"
        content_type = "json"
        insecure_ssl = true
        secret       = "${var.pipe_webhook["secret"]}"
      }

      lifecycle {
        ignore_changes = ["configuration.secret"]
      }

      events = ["${var.common_tags["env"] == "prod" ? "release" : "push"}"]
    }

If the environment is production, the GitHub webhook triggers on release events; otherwise it triggers on push events.

Make sure to generate a random secret for webhook_secret and add it to your tfvars file.
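If you would rather not manage the secret by hand, one alternative (not what this post's repository does) is to let Terraform generate it with the random provider. The resource name below is made up for illustration.

```hcl
# Alternative sketch: generate the webhook secret instead of reading it from tfvars.
resource "random_id" "webhook_secret" {
  byte_length = 32
}

# The generated value would then replace var.webhook_secret, for example:
#   map("secret", "${random_id.webhook_secret.hex}")
```

Either way the secret ends up in the Terraform state, so the state bucket should be private and encrypted.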

The pipe_webhook values for each environment are:

- Pre:

    pipe_webhook = "${merge(
      map("match_equals", "refs/heads/master"),
      map("json_path", "$.ref"),
      map("secret", "${var.webhook_secret}")
    )}"

- Pro:

    pipe_webhook = "${merge(
      map("match_equals", "published"),
      map("json_path", "$.action"),
      map("secret", "${var.webhook_secret}")
    )}"

Let's recap:

So far we have seen how to:

- write well-organized infrastructure as code that can scale to a large infrastructure

- set up continuous integration using GitHub and AWS CodeBuild

- continuously deploy changes to Pre and Pro using webhooks, based on a master/tag strategy

For questions, feel free to reach me at [email protected]